Part I: Getting Started

- Chapter 1: What Is Machine Learning?

- Chapter 2: Introduction to R and RStudio

- Chapter 3: Managing Data

Chapter 1: What Is Machine Learning?

Welcome to the world of machine learning! You're about to embark upon an exciting adventure discovering how data scientists use algorithms to uncover knowledge hidden within the troves of data that businesses, organizations, and individuals generate every day.

If you're like us, you often find yourself in situations where you are facing a mountain of data that you're certain contains important insights, but you just don't know how to extract that needle of knowledge from the proverbial haystack. That's where machine learning can help. This book is dedicated to providing you with the knowledge and skills you need to harness the power of machine learning algorithms. You'll learn about the different types of problems that are well-suited for machine learning solutions and the different categories of machine learning techniques that are most appropriate for tackling different types of problems.

Most importantly, we're going to approach this complex, technical field with a practical mind-set. In this book, our purpose is not to dwell on the intricate mathematical details of these algorithms. Instead, we'll focus on how you can put those algorithms to work for you immediately. We'll also introduce you to the R programming language, which we believe is particularly well-suited to approaching machine learning problems from a practical standpoint. But don't worry about programming or R for now. We'll get to that in Chapter 2. For now, let's dive in and get a better understanding of how machine learning works.

By the end of this chapter, you will have learned the following:

- How machine learning allows the discovery of knowledge in data

- How unsupervised learning, supervised learning, and reinforcement learning techniques differ from each other

- How classification and regression problems differ from each other

- How to measure the effectiveness of machine learning algorithms

- How cross-validation improves the accuracy of machine learning models

DISCOVERING KNOWLEDGE IN DATA

Our goal in the world of machine learning is to use algorithms to discover knowledge in our datasets that we can then apply to help us make informed decisions about the future. That's true regardless of the specific subject-matter expertise where we're working, as machine learning has applications across a wide variety of fields. For example, here are some cases where machine learning commonly adds value:

- Segmenting customers and determining the marketing messages that will appeal to different customer groups

- Discovering anomalies in system and application logs that may be indicative of a cybersecurity incident

- Forecasting product sales based on market and environmental conditions

- Recommending the next movie that a customer might want to watch based on their past activity and the preferences of similar customers

- Setting prices for hotel rooms far in advance based on forecasted demand

Of course, those are just a few examples. Machine learning can bring value to almost every field where discovering previously unknown knowledge is useful—and we challenge you to think of a field where knowledge doesn't offer an advantage!

Introducing Algorithms

As we proceed throughout this book, you'll see us continually referring to machine learning techniques as algorithms. This is a term from the world of computer science that comes up again and again in the world of data science, so it's important that you understand it. While the term sounds technically complex, the concept of an algorithm is actually straightforward, and we'd venture to guess that you use some form of an algorithm almost every day.

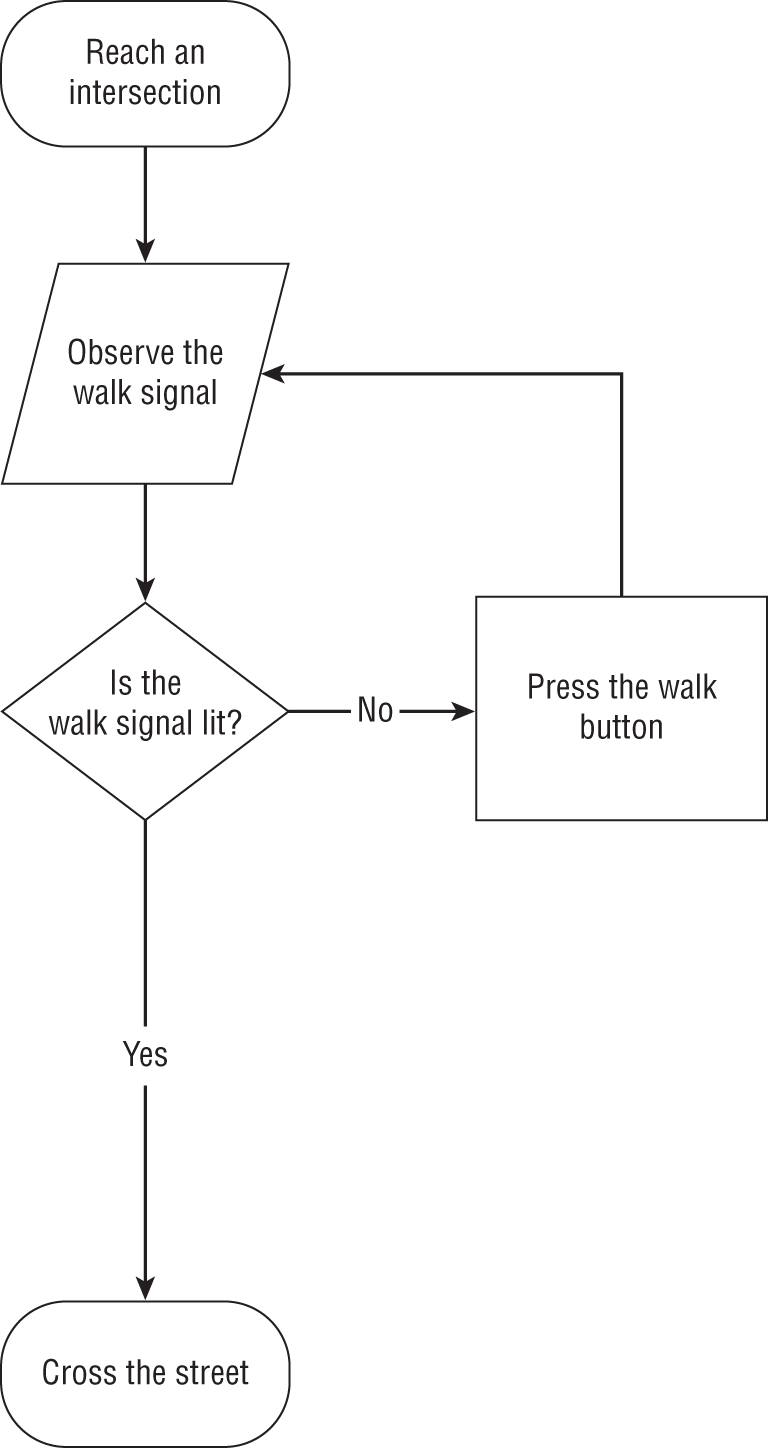

An algorithm is, quite simply, a set of steps that you follow when carrying out a process. Most commonly, we use the term when we're referring to the steps that a computer follows when it is carrying out a computational task, but we can think of many things that we do each day as algorithms. For example, when we are walking the streets of a large city and we reach an intersection, we follow an algorithm for crossing the street. Figure 1.1 shows an example of how this process might work.

Of course, in the world of computer science, our algorithms are more complex and are implemented by writing software, but we can think of them in this same way. An algorithm is simply a series of precise observations, decisions, and instructions that tell the computer how to carry out an action. We design machine learning algorithms to discover knowledge in our data. As we progress through this book, you'll learn about many different types of machine learning algorithms and how they work to achieve this goal in very different ways.

Figure 1.1 Algorithm for crossing the street

Artificial Intelligence, Machine Learning, and Deep Learning

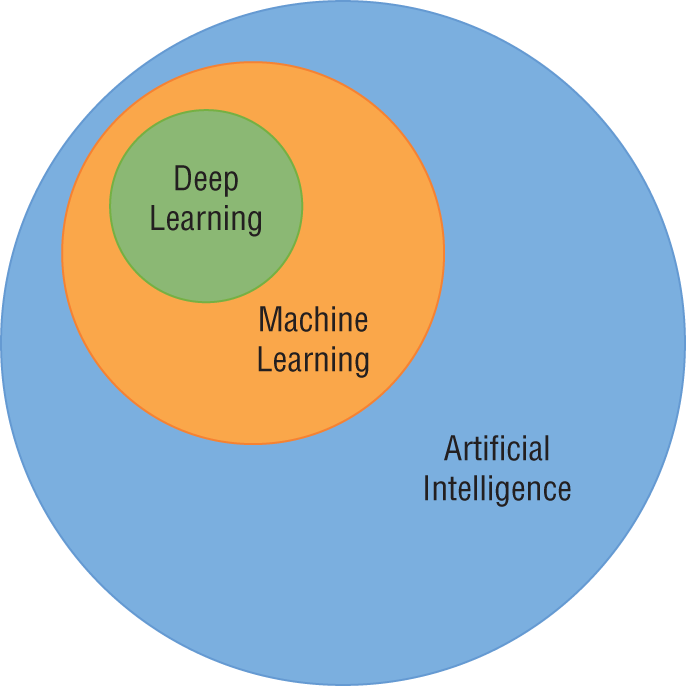

We hear the terms artificial intelligence, machine learning, and deep learning being used almost interchangeably to describe any sort of technique where computers are working with data. Now that you're entering the world of data science, it's important to have a more precise understanding of these terms.

- Artificial intelligence (AI) includes any type of technique where we are attempting to get a computer system to imitate human behavior. As the name implies, we are trying to ask computer systems to artificially behave as if they were intelligent. Now, of course, it's not possible for a modern computer to function at the level of complex reasoning found in the human mind, but we can try to mimic some small portions of human behavior and judgment.

- Machine learning (ML) is a subset of artificial intelligence techniques that attempt to apply statistics to data problems in an effort to discover new knowledge by generalizing from examples. Or, in other terms, machine learning techniques are artificial intelligence techniques designed to learn.

- Deep learning is a further subdivision of machine learning that uses a set of complex techniques, known as neural networks, to discover knowledge in a particular way. It is a highly specialized subfield of machine learning that is most commonly used for image, video, and sound analysis.

Figure 1.2 shows the relationships between these fields. In this book, we focus on machine learning techniques. Specifically, we focus on the categories of machine learning that do not fit the definition of deep learning.

MACHINE LEARNING TECHNIQUES

The machine learning techniques that we discuss in this book fit into two major categories. Supervised learning algorithms learn patterns based on labeled examples of past data. Unsupervised learning algorithms seek to uncover patterns without the assistance of labeled data. Let's take a look at each of these techniques in more detail.

Figure 1.2 The relationship between artificial intelligence, machine learning, and deep learning

Supervised Learning

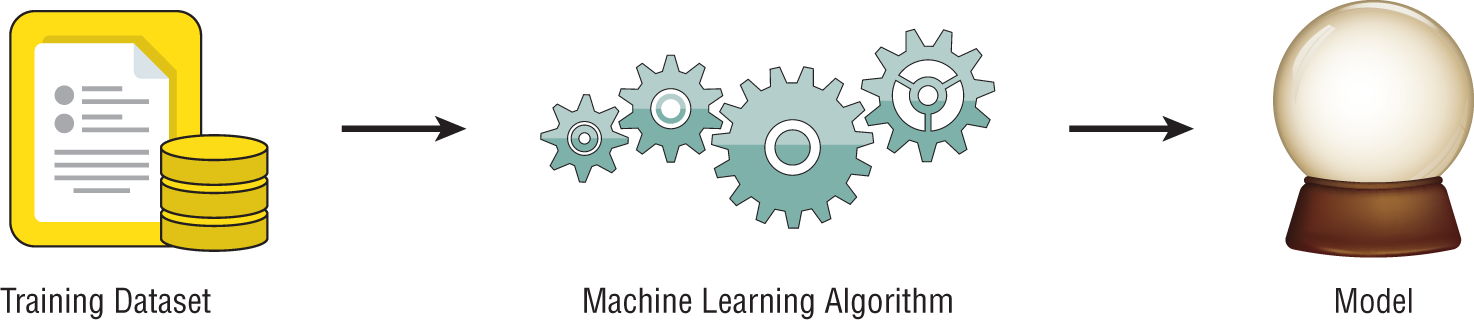

Supervised learning techniques are perhaps the most commonly used category of machine learning algorithms. The purpose of these techniques is to use an existing dataset to generate a model that then helps us make predictions about future, unlabeled data. More formally, we provide a supervised machine learning algorithm with a training dataset as input. The algorithm then uses that training data to develop a model as its output, as shown in Figure 1.3.

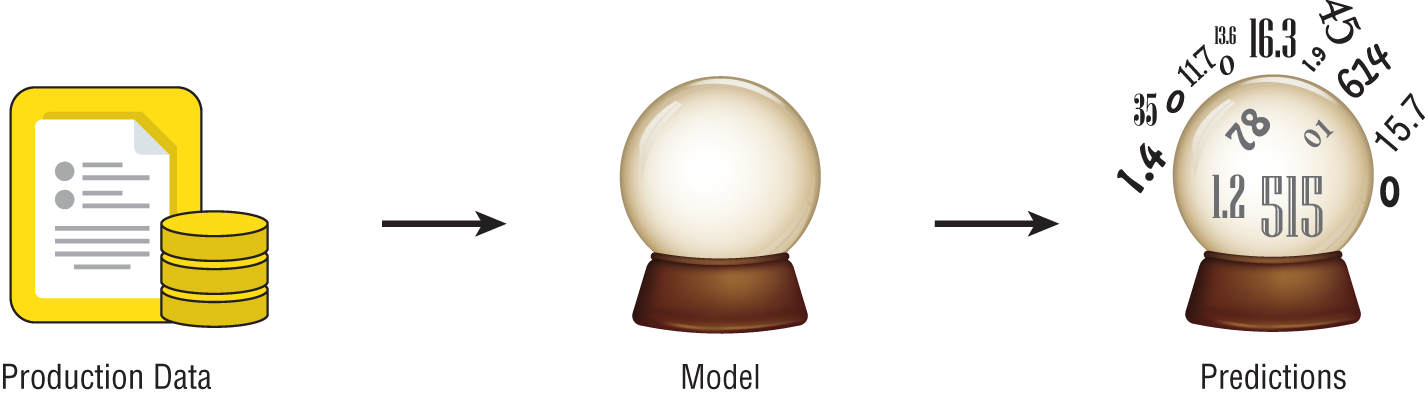

You can think of the model produced by a supervised machine learning algorithm as sort of a crystal ball—once we have it, we can use it to make predictions about our data. Figure 1.4 shows how this model functions. Once we have it, we can take any new data element that we encounter and use the model to make a prediction about that new element based on the knowledge it obtained from the training dataset.

The reason that we use the term supervised to describe these techniques is that we are using a training dataset to supervise the creation of our model. That training dataset contains labels that help us with our prediction task.

Let's reinforce that with a more concrete example. Consider a loan officer working at the car dealership shown in Figure 1.5. The salespeople at the dealership work with individual customers to sell them cars. The customers often don't have the necessary cash on hand to purchase a car outright, so they seek financing options. Our job is to match customers with the right loan product from three choices.

- Subprime loans have the most expensive interest rates and are offered to customers who are likely to miss payment deadlines or default on their loans.

- Top-shelf loans have the lowest interest rate and are offered to customers who are unlikely to miss payments and have an extremely high likelihood of repayment.

- Standard loans are offered to customers who fall in the middle of these two groups and have an interest rate that falls in between those two values.

Figure 1.3 Generic supervised learning model

Figure 1.4 Making predictions with a supervised learning model

We receive loan applications from salespeople and must make a decision on the spot. If we don't act quickly, the customer may leave the store, and the business will be lost to another dealership. If we offer a customer a higher risk loan than they would normally qualify for, we might lose their business to another dealership offering a lower interest rate. On the other hand, if we offer a customer a lower interest rate than they deserve, we might not profit on the transaction after they later default.

Our current method of doing business is to review the customer's credit report and make decisions about loan categories based on our years of experience in the role. We've “seen it all” and can rely upon our “gut instinct” to make these important business decisions. However, as budding data scientists, we now realize that there might be a better way to solve this problem using machine learning.

Our car dealership can use supervised machine learning to assist with this task. First, they need a training dataset containing information about their past customers and their loan repayment behavior. The more data they can include in the training dataset, the better. If they have several years of data, that would help develop a high-quality model.

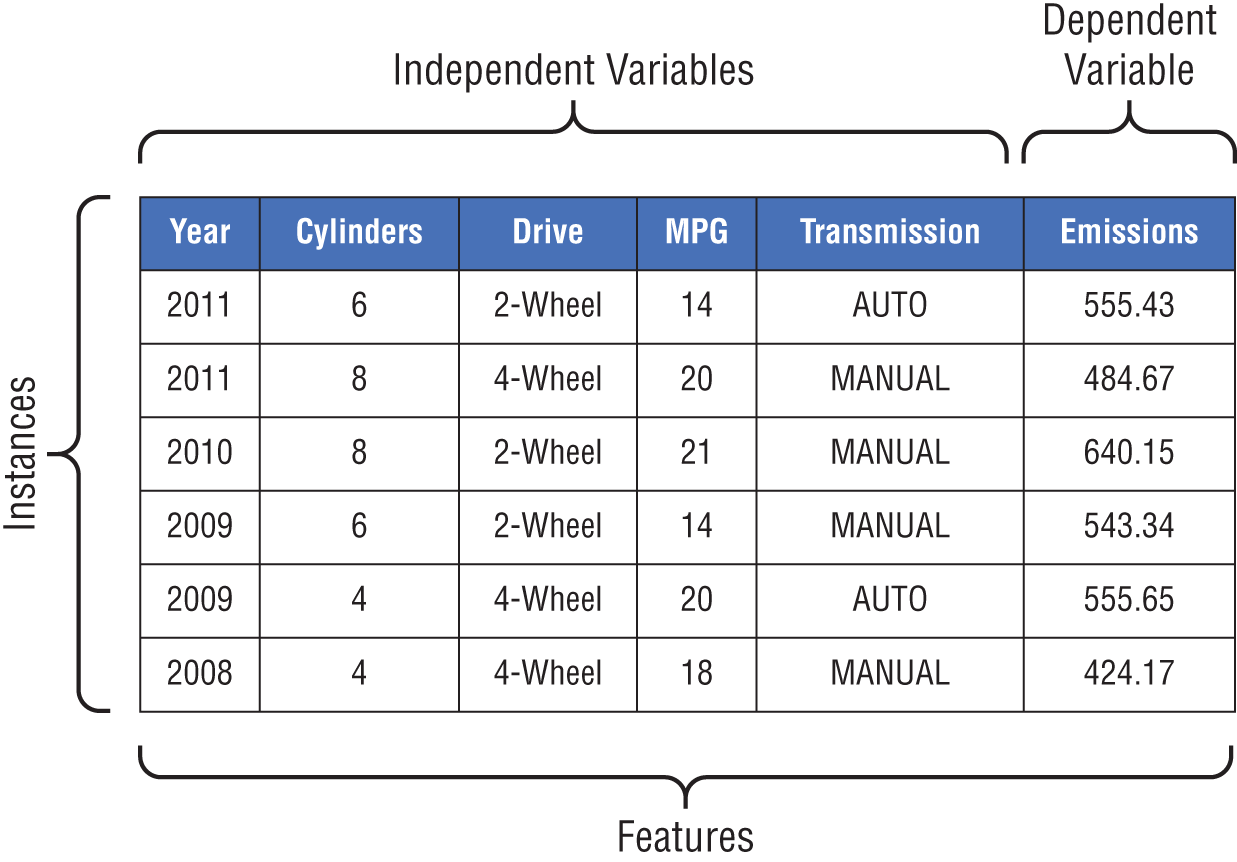

The dataset might contain a variety of information about each customer, such as the customer's approximate age, credit score, home ownership status, and vehicle type. Each of these data points is known as a feature about the customer, and they will become the inputs to the machine learning model created by the algorithm. The dataset also needs to contain labels for each one of the customers in the training dataset. These labels are the values that we'd like to predict using our model. In this case, we have two labels: default and repaid. We label each customer in our training dataset with the appropriate label for their loan status. If they repaid their loan in full, they are given the “repaid” label, while those who failed to repay their loans are given the “default” label.

Figure 1.5 Using machine learning to classify car dealership customers

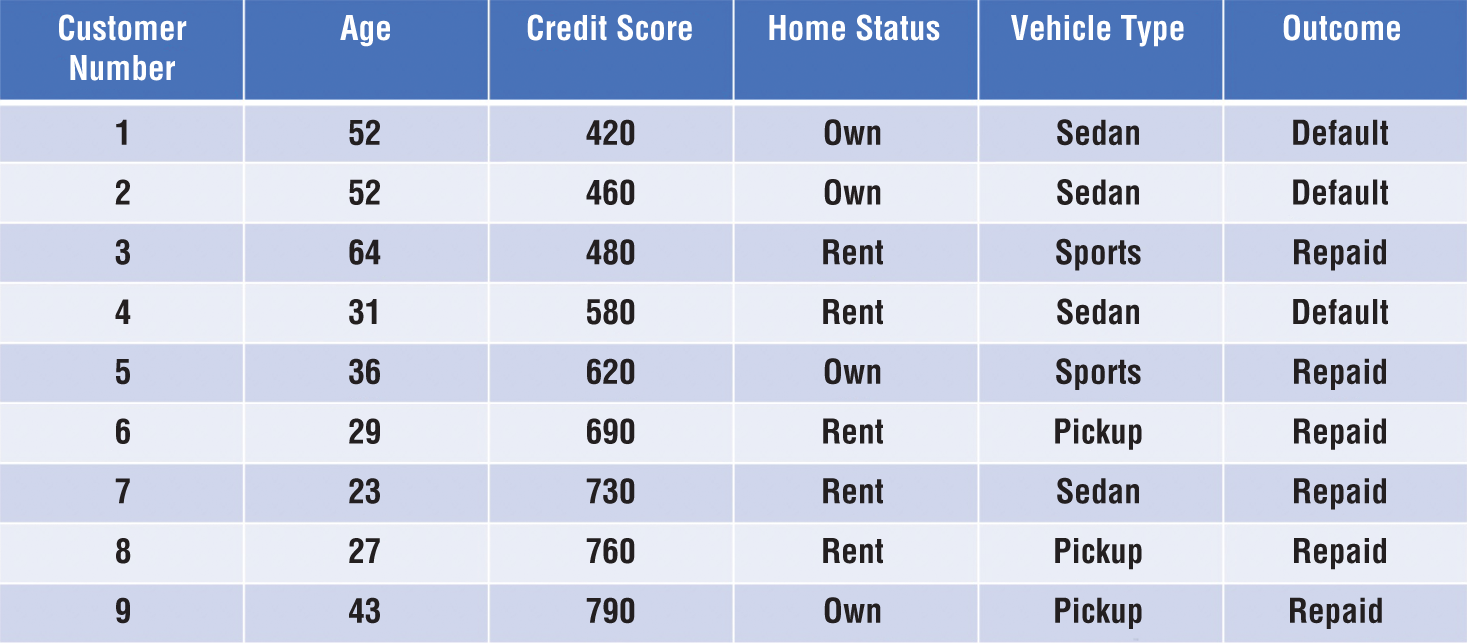

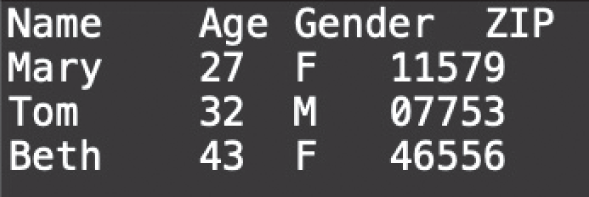

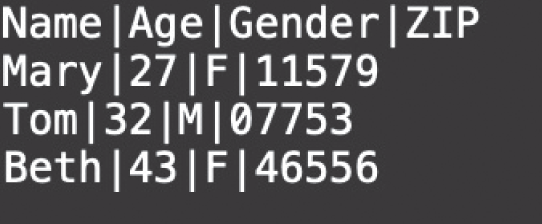

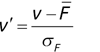

A small segment of the resulting dataset appears in Figure 1.6. Notice two things about this dataset. First, each row in the dataset corresponds to a single customer, and those customers are all past customers who have completed their loan terms. We know the outcomes of the loans made to each of these customers, providing us with the labels we need to train a supervised learning model. Second, each of the features included in the model are characteristics that are available to the loan officer at the time they are making a loan decision. That's crucial to creating a model that is effective for our given problem. If the model included a feature that specified whether a customer lost his or her job during the loan term, that would likely provide us with accurate results, but the loan officer would not be able to actually use that model because they would have no way of determining this feature for a customer at the time of a loan decision. How would they know if the customer is going to lose their job over the term of the loan that hasn't started yet?

Figure 1.6 Dataset of past customer loan repayment behavior

If we use a machine learning algorithm to generate a model based on this data, it might pick up on a few characteristics of the dataset that may also be apparent to you upon casual inspection. First, most people with a credit score under 600 who have financed a car through us in the past defaulted on that loan. If we use that characteristic alone to make decisions, we'd likely be in good shape. However, if we look at the data carefully, we might realize that we could realize an even better fit by saying that anyone who has a credit score under 600 and purchased a sedan is likely to default. That type of knowledge, when generated by an algorithm, is a machine learning model!

The loan officer could then deploy this machine learning model by simply following these rules to make a prediction each time someone applies for a loan. If the next customer through the door has a credit score of 780 and is purchasing a sports car, as shown in Figure 1.7, they should be given a top-shelf loan because it is quite unlikely that they will default. If the customer has a credit score of 410 and is purchasing a sedan, we'd definitely want to slot them into a subprime loan. Customers who fall somewhere in between these extremes would be suited for a standard loan.

Now, this was a simplistic example. All of the customers in our example fit neatly into the categories we described. This won't happen in the real world, of course. Our machine learning algorithms will have imperfect data that doesn't have neat, clean divisions between groups. We'll have datasets with many more observations, and our algorithms will inevitably make mistakes. Perhaps the next high credit-scoring young person to walk into the dealership purchasing a sports car later loses their job and defaults on the loan. Our algorithm would make an incorrect prediction. We talk more about the types of errors made by algorithms later in this chapter.

Figure 1.7 Applying the machine learning model

Unsupervised Learning

Unsupervised learning techniques work quite differently. While supervised techniques train on labeled data, unsupervised techniques develop models based on unlabeled training datasets. This changes the nature of the datasets that they are able to tackle and the models that they produce. Instead of providing a method for assigning labels to input based on historical data, unsupervised techniques allow us to discover hidden patterns in our data.

One way to think of the difference between supervised and unsupervised algorithms is that supervised algorithms help us assign known labels to new observations while unsupervised algorithms help us discover new labels, or groupings, of the observations in our dataset.

For example, let's return to our car dealership and imagine that we're now working with our dataset of customers and want to develop a marketing campaign for our service department. We suspect that the customers in our database are similar to each other in ways that aren't as obvious as the types of cars that they buy and we'd like to discover what some of those groupings might be and use them to develop different marketing messages.

Unsupervised learning algorithms are well-suited to this type of open-ended discovery task. The car dealership problem that we described is more generally known as the market segmentation problem, and there is a wealth of unsupervised learning techniques designed to help with this type of analysis. We talk about how organizations use unsupervised clustering algorithms to perform market segmentation in Chapter 12.

Let's think of another example. Imagine that we manage a grocery store and are trying to figure out the optimal placement of products on the shelves. We know that customers often run into our store seeking to pick up some common staples, such as milk, bread, meat, and produce. Our goal is to design the store so that impulse purchases are near each other in the store. As seen in Figure 1.8, we want to place the cookies right next to the milk so someone who came into the store to purchase milk will see them and think “Those cookies would be delicious with a glass of this milk!”

Figure 1.8 Strategically placing items in a grocery store based on unsupervised learning

The problem of determining which items customers frequently purchase together is also a well-known problem in machine learning known as the market basket problem. We talk about how data scientists use association rules approaches to tackle the market basket problem in Chapter 11.

Note

You may also hear about a third type of machine learning algorithm known as reinforcement learning. These algorithms seek to learn based on trial and error, similar to the way that a young child learns the rules of a home by being rewarded and punished. Reinforcement learning is an interesting technique but is beyond the scope of this book.

In the previous section, we described ways to group algorithms based on the types of data that they use for training. Algorithms that use labeled training datasets are known as supervised algorithms because their training is “supervised” by the labels while those that use unlabeled training datasets are known as unsupervised algorithms because they are free to learn whatever patterns they happen to discover, without “supervision.” Think of this categorization scheme as describing how machine learning algorithms learn.

We can also categorize our algorithms based on what they learn. In this book, we discuss three major types of knowledge that we can learn from our data. Classification techniques train models that allow us to predict membership in a category. Regression techniques allow us to predict a numeric result. Similarity learning techniques help us discover the ways that observations in our dataset resemble and differ from each other.

Classification Techniques

Classification techniques use supervised machine learning to help us predict a categorical response. That means that the output of our model is a non-numeric label or, more formally, a categorical variable. This simply means that the variable takes on discrete, non-numeric values, rather than numeric values. Here are some examples of categorical variables with some possible values they might take on:

- Educational degree obtained (none, bachelor's, master's, doctorate)

- Citizenship (United States, Ireland, Nigeria, China, Australia, South Korea)

- Blood type (A+, A-, B+, B-, AB+, AB-, O+, O-)

- Political party membership (Democrat, Republican, Independent)

- Customer status (current customer, past customer, noncustomer)

For example, earlier in this chapter, we discussed a problem where managers at a car dealership needed the ability to predict loan repayment. This is an example of a classification problem because we are trying to assign each customer to one of two categories: repaid or default.

We encounter all types of classification problems in the real world. We might try to determine which of three promotional offers would be most appealing to a potential customer. This is a classification problem where the categories are the three different offers.

Similarly, we might want to look at people attempting to log on to our computer systems and predict whether they are a legitimate user or a hacker seeking to violate the system's security policies. This is also a classification problem where we are trying to assign each login attempt to the category of “legitimate user” or “hacker.”

Regression Techniques

Regression techniques use supervised machine learning techniques to help us predict a continuous response. Simply put, this means that the output of our model is a numeric value. Instead of predicting membership in a discrete set of categories, we are predicting the value of a numeric variable.

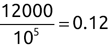

For example, a financial advisor seeking new clients might want to screen possible clients based on their income. If the advisor has a list of potential customers that does not include income explicitly, they might use a dataset of past contacts with known incomes to train a regression model that predicts the income of future contacts. This model might look something like this:

![]()

If the financial advisor encounters a new potential client, they can then use this formula to predict the person's income based on their age and years of education. For each year of age, they would expect the person to have $1,000 in additional annual income. Similarly, their income would increase $3,000 for each year of education beyond high school.

Regression models are quite flexible. We can plug in any possible value of age or income and come up with a prediction for that person's income. Of course, if we didn't have good training data, our prediction might not be accurate. We also might find that the relationship between our variables isn't explained by a simple linear technique. For example, income likely increases with age, but only up until a certain point. More advanced regression techniques allow us to build more complex models that can take these factors into account. We discuss those in Chapter 4.

Similarity Learning Techniques

Similarity learning techniques use machine learning algorithms to help us identify common patterns in our data. We might not know exactly what we're trying to discover, so we allow the algorithm to explore the dataset looking for similarities that we might not have already predicted.

We've already mentioned two similarity learning techniques in this chapter. Association rules techniques, discussed more fully in Chapter 11, allow us to solve problems that are similar to the market basket problem—which items are commonly purchased together. Clustering techniques, discussed more fully in Chapter 12, allow us to group observations into clusters based on the similar characteristics they possess.

Association rules and clustering are both examples of unsupervised uses of similarity learning techniques. It's also possible to use similarity learning in a supervised manner. For example, nearest neighbor algorithms seek to assign labels to observations based on the labels of the most similar observations in the training dataset. We discuss those more in Chapter 6.

MODEL EVALUATION

Before beginning our discussion of specific machine learning algorithms, it's also helpful to have an idea in mind of how we will evaluate the effectiveness of our algorithms. We're going to cover this topic in much more detail throughout the book, so this is just to give you a feel for the concept. As we work through each machine learning technique, we'll discuss evaluating its performance against a dataset. We'll also have a more complete discussion of model performance evaluation in Chapter 9.

Until then, the important thing to realize is that some algorithms will work better than others on different problems. The nature of the dataset and the nature of the algorithm will dictate the appropriate technique.

In the world of supervised learning, we can evaluate the effectiveness of an algorithm based on the number and/or magnitude of errors that it makes. For classification problems, we often look at the percentage of times that the algorithm makes an incorrect categorical prediction, or the misclassification rate. Similarly, we can look at the percentage of predictions that were correct, known as the algorithm's accuracy. For regression problems, we often look at the difference between the values predicted by the algorithm and the actual values.

Note

It only makes sense to talk about this type of evaluation when we're referring to supervised learning techniques where there actually is a correct answer. In unsupervised learning, we are detecting patterns without any objective guide, so there is no set “right” or “wrong” answer to measure our performance against. Instead, the effectiveness of an unsupervised learning algorithm lies in the value of the insight that it provides us.

Classification Errors

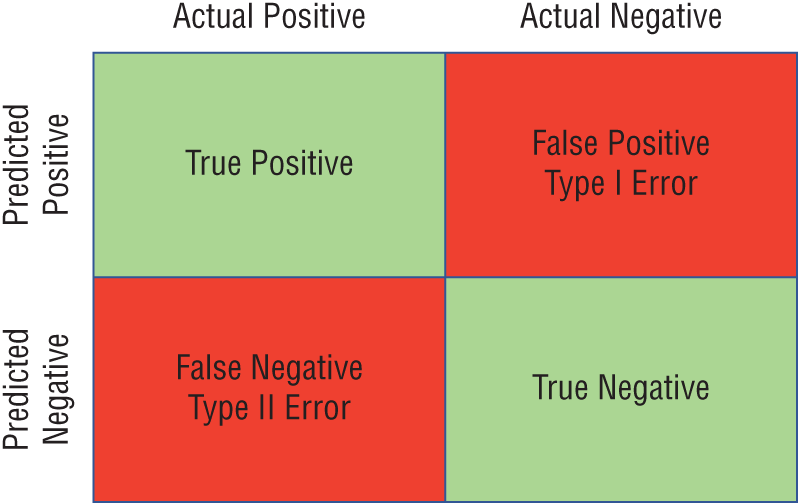

Many classification problems seek to predict a binary value identifying whether an observation is a member of a class. We refer to cases where the observation is a member of the class as positive cases and cases where the observation is not a member of the class as negative cases.

For example, imagine we are developing a model designed to predict whether someone has a lactose intolerance, making it difficult for them to digest dairy products. Our model might include demographic, genetic, and environmental factors that are known or suspected to contribute to lactose intolerance. The model then makes predictions about whether individuals are lactose intolerant or not based on those attributes. Individuals predicted to be lactose intolerant are predicted positives, while those who are predicted to not be lactose intolerant (or, stated more simply, those who are predicted to be lactose tolerant) are predicted negatives. These predicted values come from our machine learning model.

There is also, however, a real-world truth. Regardless of what the model predicts, every individual person is either lactose intolerant or they are not. This real-world data determines whether the person is an actual positive or an actual negative. When the predicted value for an observation differs from the actual value for that same observation, an error occurs. There are two different types of error that may occur in a classification problem.

- False positive errors occur when the model labels an observation as predicted positive when it is, in reality, an actual negative. For example, if the model identifies someone as likely lactose intolerant while they are, in reality, lactose tolerant, this is a false positive error. False positive errors are also known as Type I errors.

- False negative errors occur when the model labels an observation as predicted negative when it is, in reality, an actual positive. In our lactose intolerance model, if the model predicts someone as lactose tolerant when they are, in reality, lactose intolerant, this is a false negative error. False negative errors are also known as Type II errors.

Similarly, we may label correctly predicted observations as true positives or true negatives, depending on their label. Figure 1.9 shows the types of errors in chart form.

Figure 1.9 Error types

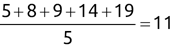

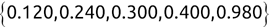

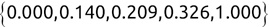

Of course the absolute numbers for false positive and false negative errors depend on the number of predictions that we make. Instead of using these magnitude-based measures, we measure the percentage of times that those errors occur. For example, the false positive rate (FPR) is the percentage of negative instances that were incorrectly identified as positive. We can compute this rate by dividing the number of false positives (FP) by the sum of the number of false positives and the number of true negatives (TN), or, as a formula:

![]()

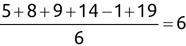

Similarly, we can compute the false negative rate (FNR) as follows:

![]()

There is no clear-cut rule about whether one type of error is better or worse than the other. This determination depends greatly on the type of problem being solved.

For example, imagine that we're using a machine learning algorithm to classify a large list of prospective customers as either people who will purchase our product (positive cases) or people who will not purchase our product (negative cases). We only spend the money to send the mailing to prospects labeled by the algorithm as positive.

In the case of a false positive mailing, you send a brochure to a customer who does not buy your product. You've lost the money spent on printing and mailing the brochure. In the case of a false negative result, you do not send a mailing to a customer who would have responded. You've lost the opportunity to sell your product to a customer. Which of these is worse? It depends on the cost of the mailing, the potential profit per customer, and other factors.

On the other hand, consider the use of a machine learning model to screen patients for the likelihood of cancer and then refer those patients with positive results for additional, more invasive testing. In the case of a false negative result, a patient who potentially has cancer is not sent for additional screening, possibly leaving an active disease untreated. This is clearly a very bad result.

False positive results are not without harm, however. If a patient is falsely flagged as potentially cancerous, they are subjected to unnecessary testing that is potentially costly and painful, consuming resources that could have been used on another patient. They are also subject to emotional harm while they are waiting for the new test results.

The evaluation of machine learning problems is a tricky proposition, and it cannot be done in isolation from the problem domain. Data scientists, subject-matter experts, and, in some cases, ethicists, should work together to evaluate models in light of the benefits and costs of each error type.

Regression Errors

The errors that we might make in regression problems are quite different because the nature of our predictions is different. When we assign classification labels to instances, we can be either right or wrong with our prediction. When we label a noncancerous tumor as cancerous, that is clearly a mistake. However, in regression problems, we are predicting a numeric value.

Consider the income prediction problem that we discussed earlier in this chapter. If we have an individual with an actual income of $45,000 annually and our algorithm's prediction is on the nose at exactly $45,000, that's clearly a correct prediction. If the algorithm predicts an income of $0 or $10,000,000, almost everyone would consider those predictions objectively wrong. But what about predictions of $45,001, $45,500, $46,000, or $50,000? Are those all incorrect? Are some or all of them close enough?

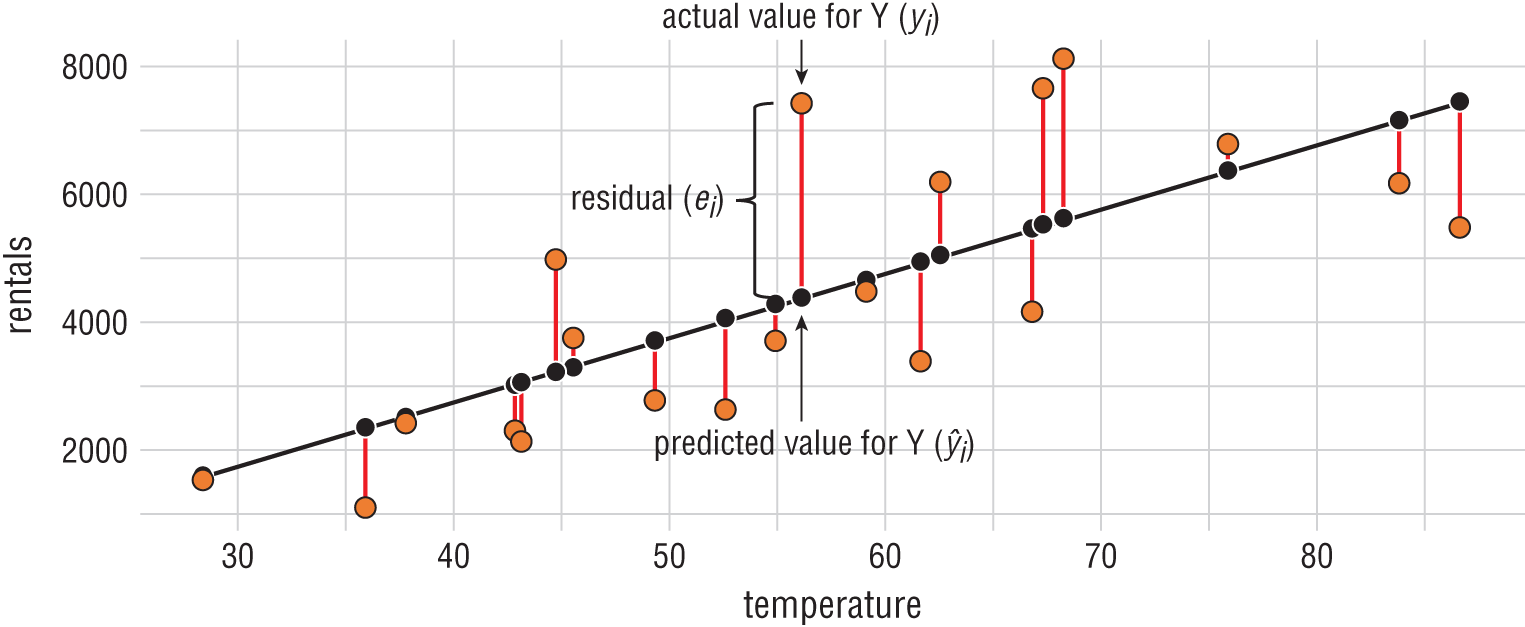

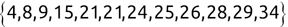

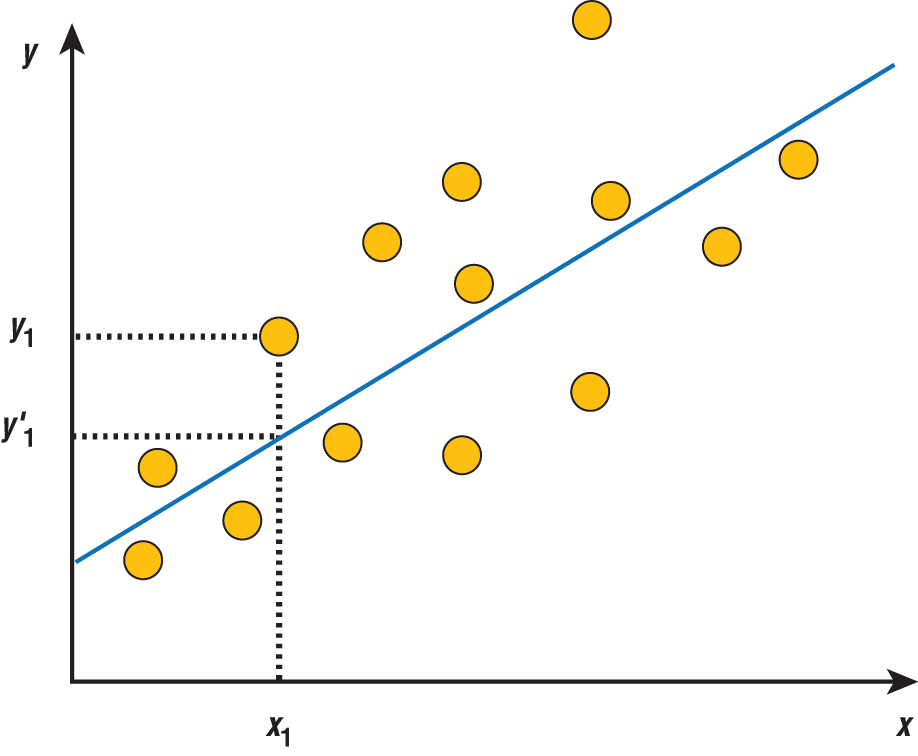

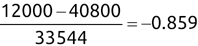

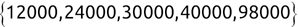

It makes more sense for us to evaluate regression algorithms based on the magnitude of the error in their predictions. We determine this by measuring the distance between the predicted value and the actual value. For example, consider the dataset shown in Figure 1.10.

Figure 1.10 Residual error

In this dataset, we're trying to predict the number of bicycle rentals that occur each day based on the average temperature that day. Bicycle rentals appear on the y-axis while temperature appears on the x-axis. The black line is a regression line that says that we expect bicycle rentals to increase as temperature increases. That black line is our model, and the black dots are predictions at specific temperature values along that line.

The orange dots represent real data gathered during the bicycle rental company's operations. That's the “correct” data. The red lines between the predicted and actual values are the magnitude of the error, which we call the residual value. The longer the line, the worse the algorithm performed on that dataset.

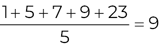

We can't simply add the residuals together because some of them are negative values that would cancel out the positive values. Instead, we square each residual value and then add those squared residuals together to get a performance measure called the residual sum of squares.

We revisit the concept of residual error, as well as this specific bicycle rental dataset, in Chapter 4.

Types of Error

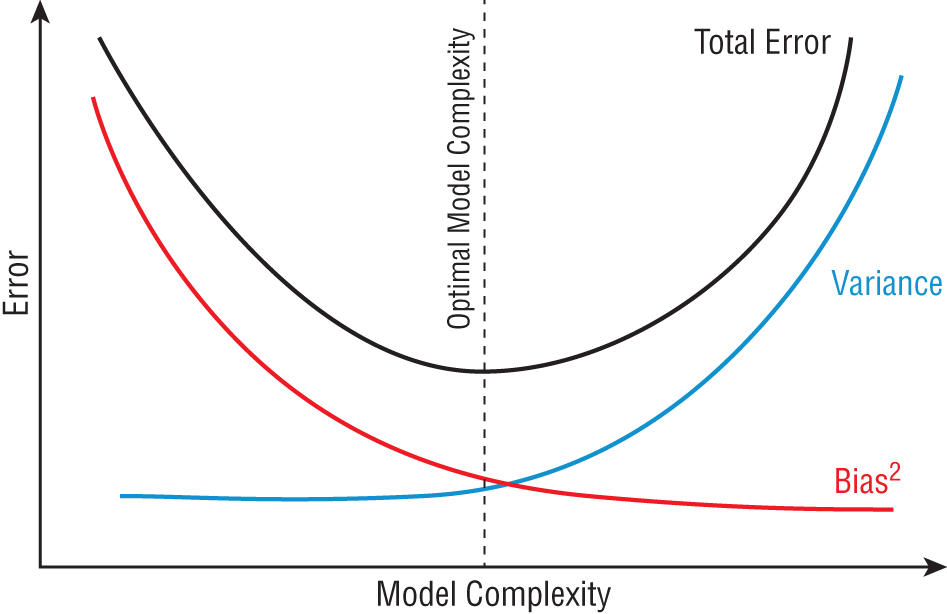

When we build a machine learning model for anything other than the most simplistic problems, the model will include some type of prediction error. This error comes in three different forms.

- Bias (in the world of machine learning) is the type of error that occurs due to our choice of a machine learning model. When the model type that we choose is unable to fit our dataset well, the resulting error is bias.

- Variance is the type of error that occurs when the dataset that we use to train our machine learning model is not representative of the entire universe of possible data.

- Irreducible error, or noise, occurs independently of the machine learning algorithm and training dataset that we use. It is error inherent in the problem that we are trying to solve.

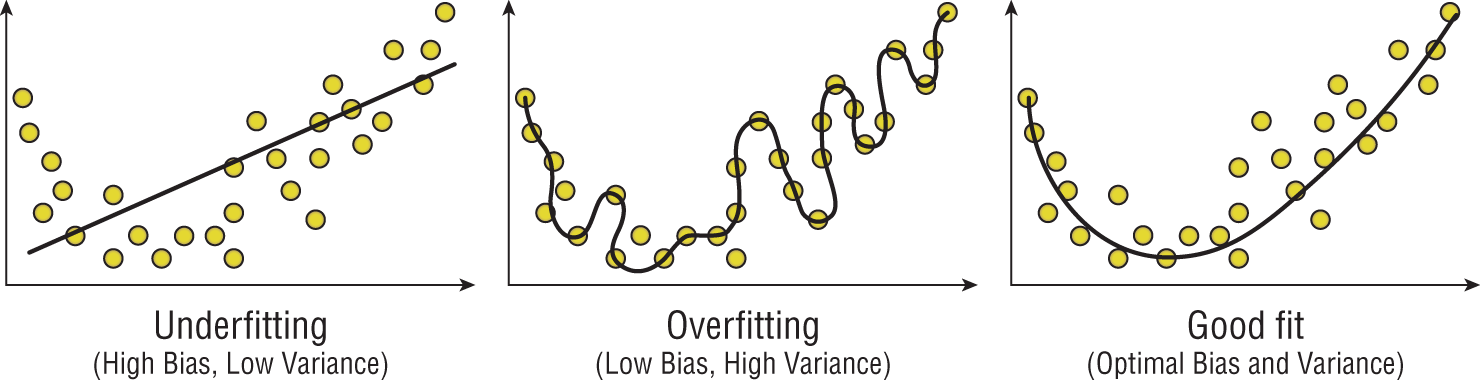

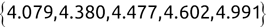

When we are attempting to solve a specific machine learning problem, we cannot do much to address irreducible error, so we focus our efforts on the two remaining sources of error: bias and variance. Generally speaking, an algorithm that exhibits high variance will have low bias, while a low-variance algorithm will have higher bias, as shown in Figure 1.11. Bias and variance are intrinsic characteristics of our models and coexist. When we modify our models to improve one, it comes at the expense of the other. Our goal is to find an optimal balance between the two.

In cases where we have high bias and low variance, we describe the model as underfitting the data. Let's take a look at a few examples that might help illustrate this point. Figure 1.12 shows a few attempts to use a function of two variables to predict a third variable. The leftmost graph in Figure 1.12 shows a linear model that underfits the data. Our data points are distributed in a curved manner, but our choice of a straight line (a linear model) limits the ability of the model to fit our dataset. There is no way that you can draw a straight line that will fit this dataset well. Because of this, the majority of the error in our approach is due to our choice of model and our dataset exhibits high bias.

The middle graph in Figure 1.12 illustrates the problem of overfitting, which occurs when we have a model with low bias but high variance. In this case, our model fits the training dataset too well. It's the equivalent of studying for a specific test (the training dataset) rather than learning a generalized solution to the problem. It's highly likely that when this model is used on a different dataset, it will not work well. Instead of learning the underlying knowledge, we studied the answers to a past exam. When we faced a new exam, we didn't have the knowledge necessary to figure out the answers.

The balance that we seek is a model that optimizes both bias and variance, such as the one shown in the rightmost graph of Figure 1.12. This model matches the curved nature of the distribution but does not closely follow the specific data points in the training dataset. It aligns with the dataset much better than the underfit model but does not closely follow specific points in the training dataset as the overfit model does.

Figure 1.11 The bias/variance trade-off

Figure 1.12 Underfitting, overfitting, and optimal fit

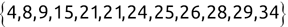

Partitioning Datasets

When we evaluate a machine learning model, we can protect against variance errors by using validation techniques that expose the model to data other than the data used to create the model. The point of this approach is to address the overfitting problem. Look back at the overfit model in Figure 1.12. If we used the training dataset to evaluate this model, we would find that it performed extremely well because the model is highly tuned to perform well on that specific dataset. However, if we used a new dataset to evaluate the model, we'd likely find that it performs quite poorly.

We can explore this issue by using a test dataset to assess the performance of our model. The test dataset is set aside at the beginning of the model development process specifically for the purpose of model assessment. It is not used in the training process, so it is not possible for the model to overfit the test dataset. If we develop a generalizable model that does not overfit the training dataset, it will also perform well on the test dataset. On the other hand, if our model overfits the training dataset, it will not perform well on the test dataset.

We also sometimes need a separate dataset to assist with the model development process. These datasets, known as validation datasets, are used to help develop the model in an iterative process, adjusting the parameters of the model during each iteration until we find an approach that performs well on the validation dataset. While it may be tempting to use the test dataset as the validation dataset, this approach reintroduces the potential of overfitting the test dataset, so we should use a third dataset for this purpose.

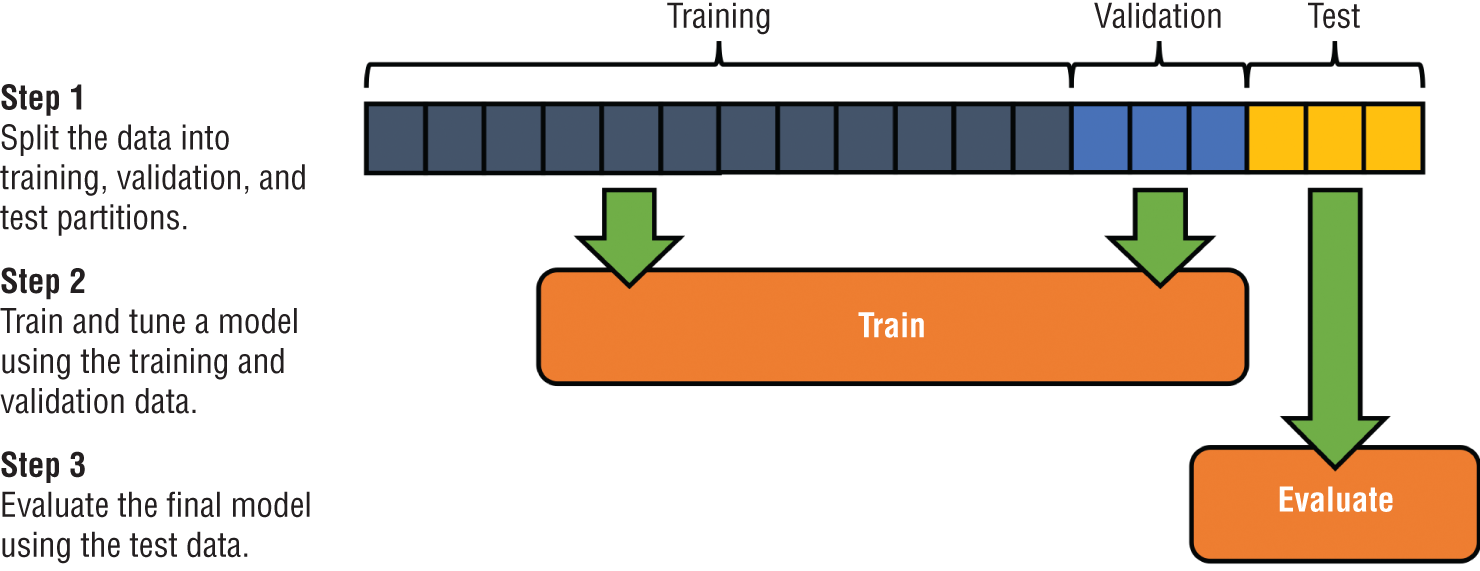

Holdout Method

The most straightforward approach to test and validation datasets is the holdout method. In this approach, illustrated in Figure 1.13, we set aside portions of the original dataset for validation and testing purposes at the beginning of the model development process. We use the validation dataset to assist in model development and then use the test dataset to evaluate the performance of the final model.

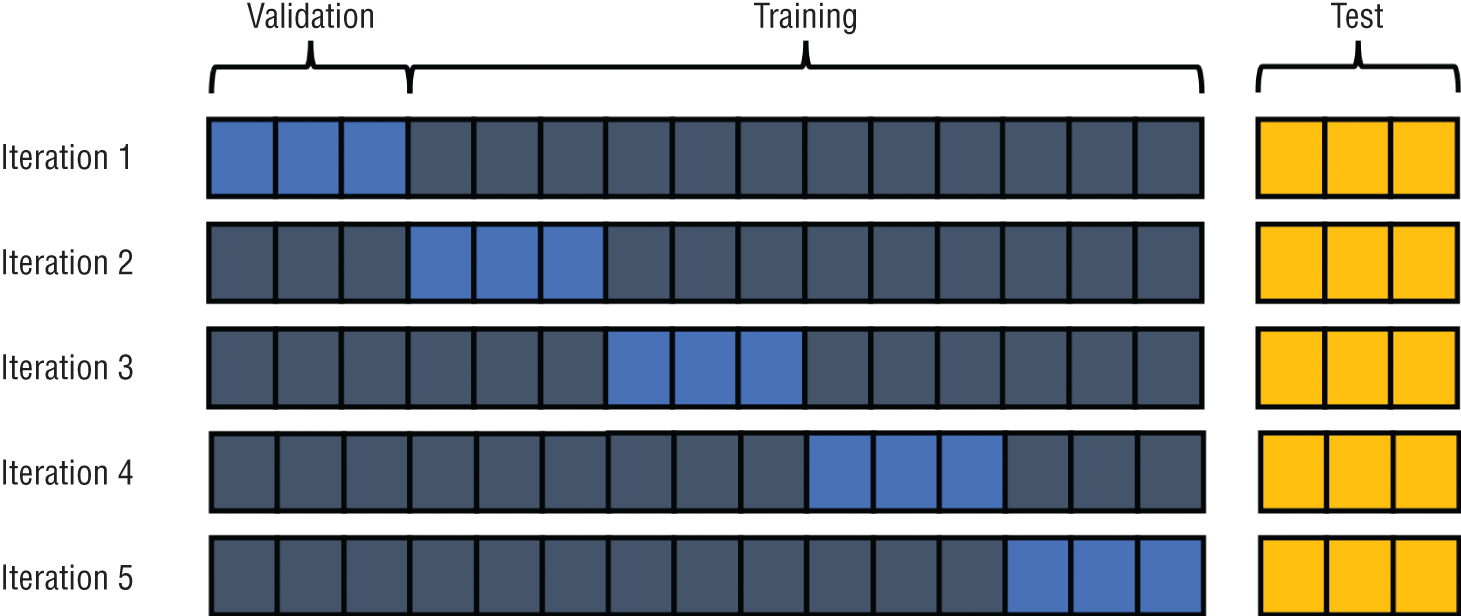

Cross-Validation Methods

There are also a variety of more advanced methods for creating validation datasets that perform repeated sampling of the data during an iterative approach to model development. These approaches, known as cross-validation techniques, are particularly useful for smaller datasets where it is undesirable to reserve a portion of the dataset for validation purposes.

Figure 1.14 shows an example of cross-validation. In this approach, we still set aside a portion of the dataset for testing purposes, but we use a different portion of the training dataset for validation purposes during each iteration of model development.

If this sounds complicated now, don't worry about it. We discuss the holdout method and cross-validation in greater detail when we get to Chapter 9. For now, you should just have a passing familiarity with these techniques.

Figure 1.13 Holdout method

Figure 1.14 Cross-validation method

EXERCISES

- Consider each of the following machine learning problems. Would the problem be best approached as a classification problem or a regression problem? Provide a rationale for your answer.

- Predicting the number of fish caught on a commercial fishing voyage

- Identifying likely adopters of a new technology

- Using weather and population data to predict bicycle rental rates

- Predicting the best marketing campaign to send a specific person

- You developed a machine learning algorithm that assesses a patient's risk of heart attack (a positive event) based on a number of diagnostic criteria. How would you describe each of the following events?

- Your model identifies a patient as likely to suffer a heart attack, and the patient does suffer a heart attack.

- Your model identifies a patient as likely to suffer a heart attack, and the patient does not suffer a heart attack.

- Your model identifies a patient as not likely to suffer a heart attack, and the patient does not suffer a heart attack.

- Your model identifies a patient as not likely to suffer a heart attack, and the patient does suffer a heart attack.

Chapter 2:Introduction to R and RStudio

Machine learning sits at the intersection of the worlds of statistics and software development. Throughout this book, we focus extensively on the statistical techniques used to unlock the value hidden within data. In this chapter, we provide you with the computer science tools that you will need to implement these techniques. In this book, we've chosen to do this using the R programming language. This chapter introduces the fundamental concepts of the R language that you will use consistently throughout the remainder of the book.

By the end of this chapter, you will have learned the following:

- The role that the R programming language plays in the world of data science and analytics

- How the RStudio integrated development environment (IDE) facilitates coding in R

- How to use packages to redistribute and reuse R code

- How to write, save, and execute your own basic R script

- The purpose of different data types in R

WELCOME TO R

The R programming language began in 1992 as an effort to create a special-purpose language for use in statistical applications. More than two decades later, the language has evolved into one of the most popular languages used by statisticians, data scientists, and business analysts around the world.

R gained rapid traction as a popular language for several reasons. First, it is available to everyone as a free, open source language developed by a community of committed developers. This approach broke the mold of past approaches to analytic tools that relied upon proprietary, commercial software that was often out of the financial reach of many individuals and organizations.

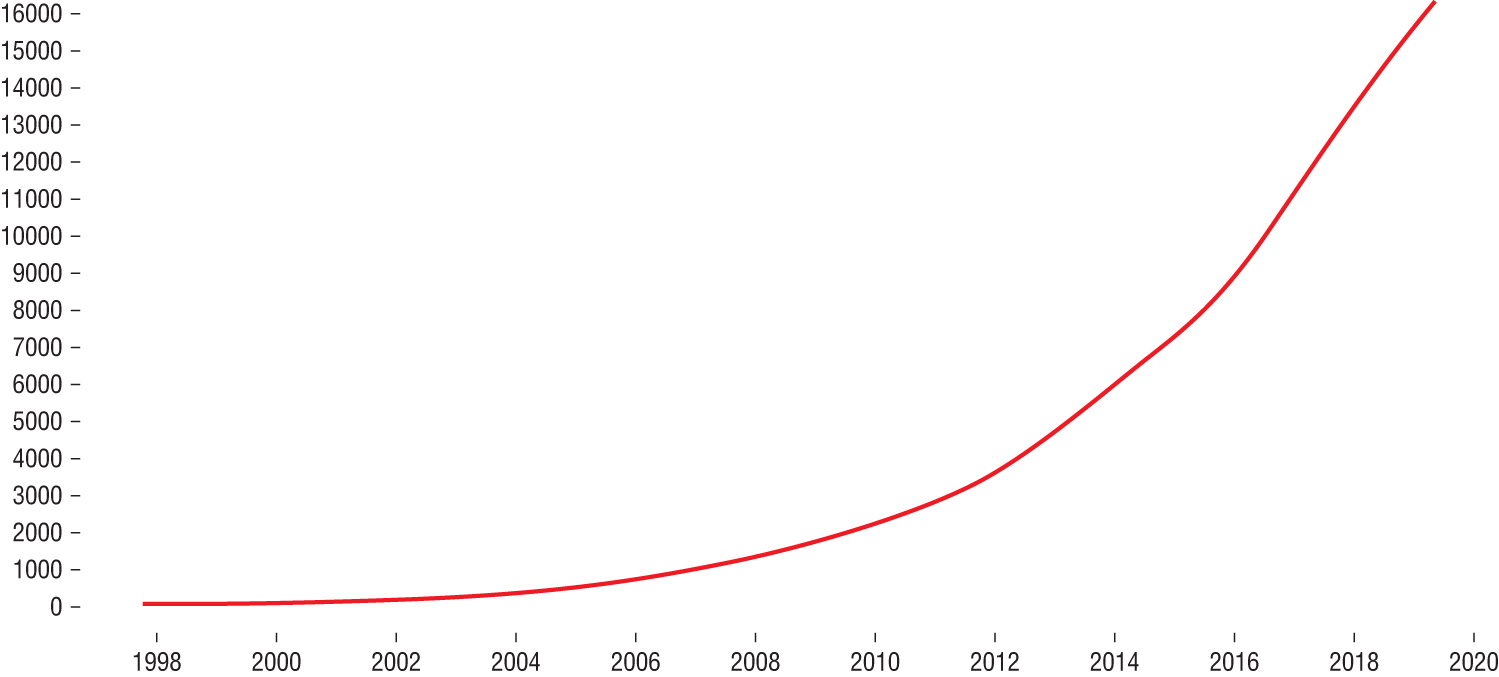

R also continues to grow in popularity because of its adoption by the creators of machine learning methods. Almost any new machine learning technique created today quickly becomes available to R users in a redistributable package, offered as open source code on the Comprehensive R Archive Network (CRAN), a worldwide repository of popular R code. Figure 2.1 shows the growth of the number of packages available through CRAN over time. As you can see, the growth took off significantly over the past decade.

Figure 2.1 Growth of the number of CRAN packages over time

It's also important to know that R is an interpreted language, rather than a compiled language. In an interpreted language, the code that you write is stored in a document called a script, and this script is the code that is directly executed by the system processing the code. In a compiled language, the source code written by a developer runs through a specialized program called a compiler, which converts the source code into executable machine language.

The fact that R is an interpreted language also means that you can execute R commands directly and see an immediate result. For example, you could execute the following simple command to add 1 and 1:

Code snippet

> 1+1[1] 2When you do this, the R interpreter immediately responds with the result: 2.

R AND RSTUDIO COMPONENTS

Our working environment for this book consists of two major components: the R programming language and the RStudio integrated development environment (IDE). While R is an open source language, RStudio is a commercial product designed to make using R easier.

The R Language

The open source R language is available as a free download from the R Project website at https://www.r-project.org. As of the writing of this book, the current version of R is version 3.6.0, code-named “Planting of a Tree.” R is generally written to be backward compatible, so if you are using a later version of R, you should not experience any difficulties following along with the code in this book.

Note

The code names assigned to different releases of R are quite interesting! Past code names included “Great Truth,” “Roasted Marshmallows,” “Wooden Christmas-Tree,” and “You Stupid Darkness.” These are all references to the Peanuts comic strip by Charles Schultz.

If you haven't done so already, now would be a good time to install the most recent version of R on your computer. Simply visit the R Project home page, click the CRAN link, and choose the CRAN mirror closest to your location. You'll then see a CRAN site similar to the one shown in Figure 2.2. Choose the download link for your operating system and run the installer after the download completes.

Figure 2.2 Comprehensive R Archive Network (CRAN) mirror site

RStudio

As an integrated development environment, RStudio offers a well-designed graphical interface to assist with your creation of R code. There's no reason that you couldn't simply open a text editor, write an R script, and then execute it directly using the open source R environment. But there's also no reason that you should do that! RStudio makes it much easier to manage your code, monitor its progress, and troubleshoot issues that might arise in your R scripts.

While R is an open source project, the RStudio IDE comes in different versions. There is an open source version of RStudio that is available for free, but RStudio also offers commercial versions of its products that come with enhanced support options and added features.

For the purposes of this book, the open source version of RStudio will be more than sufficient.

RStudio Desktop

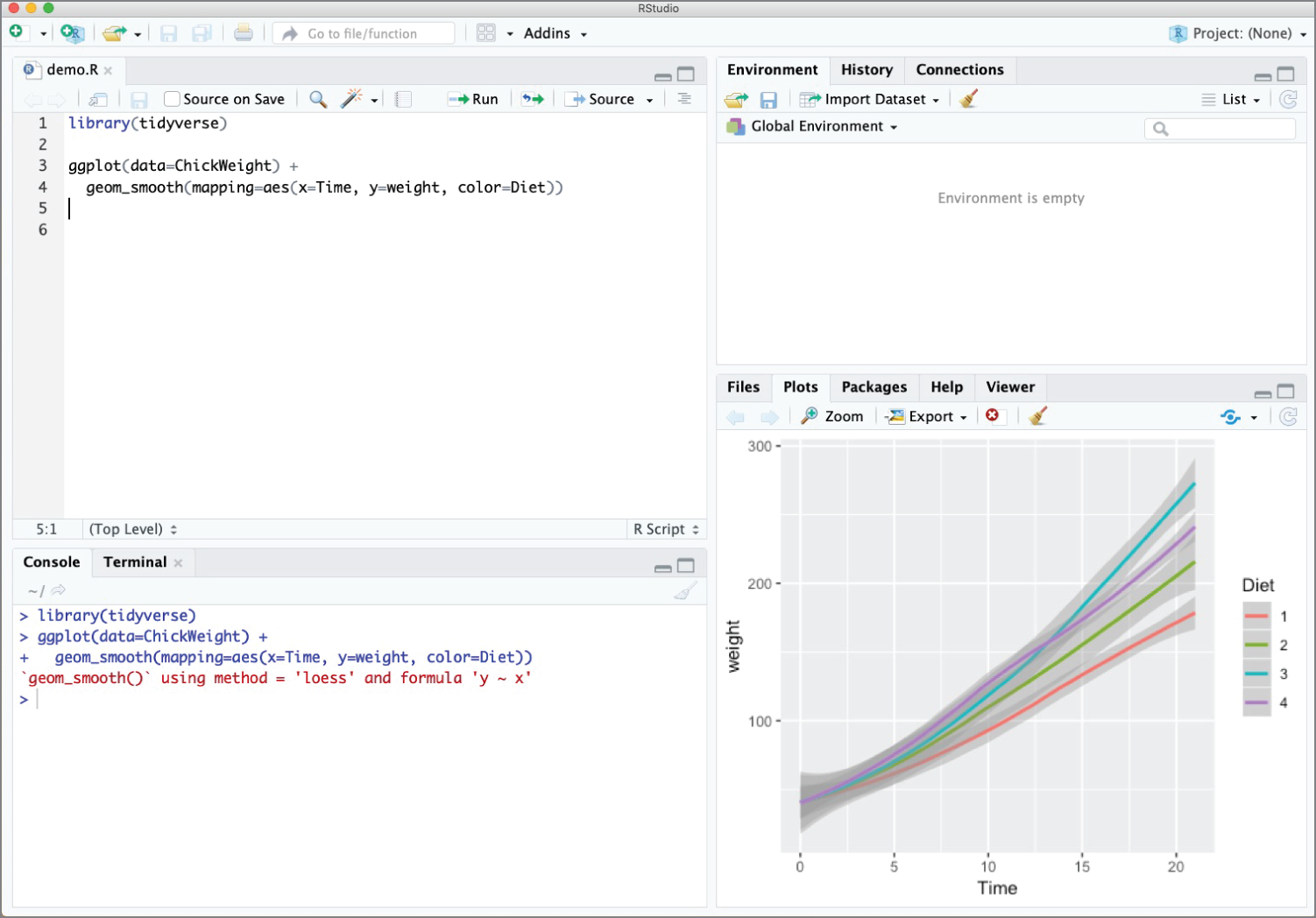

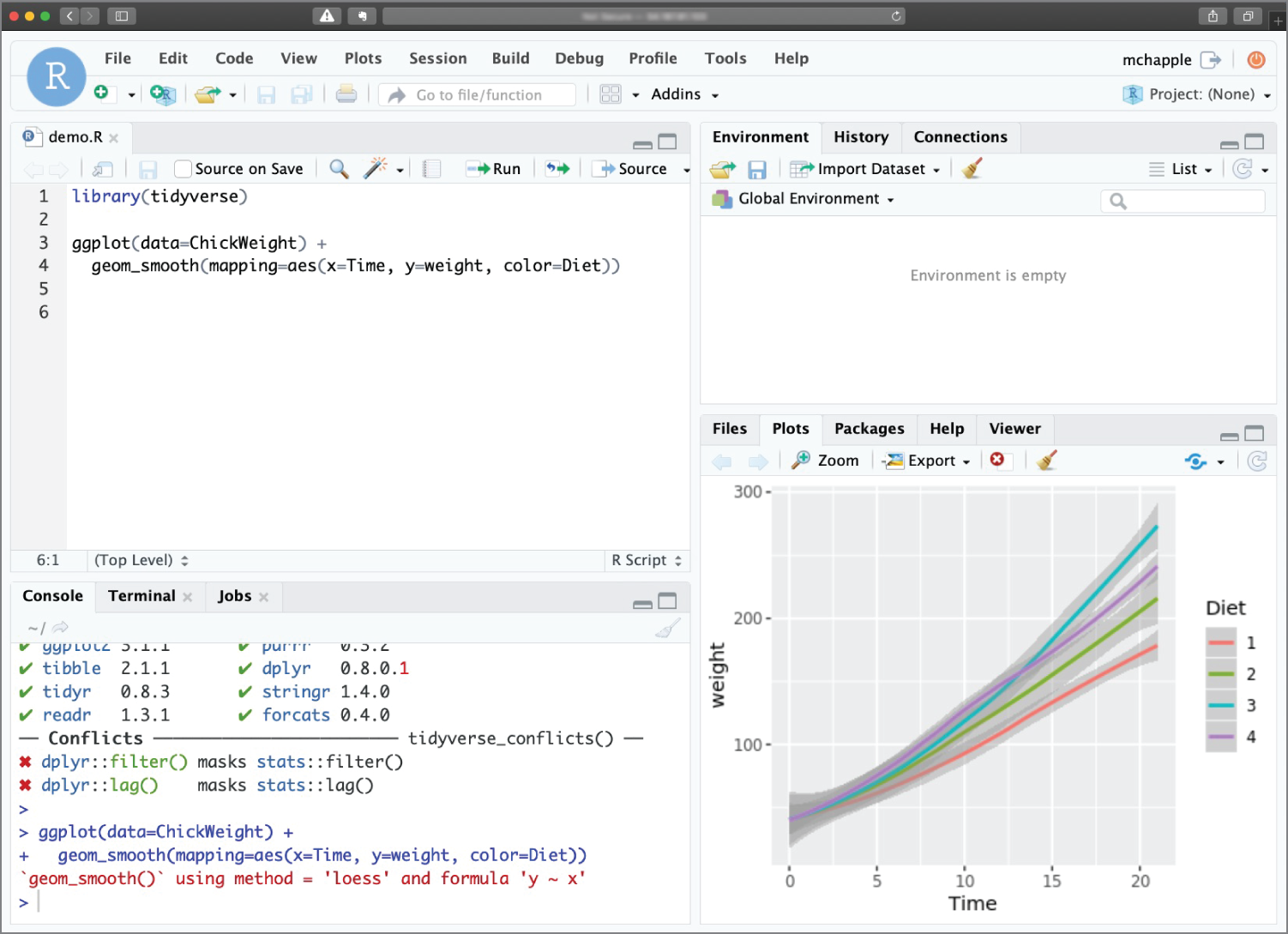

RStudio Desktop is the most commonly used version of RStudio, especially for individual programmers. It's a software package that you download and install on your Windows, Mac, or Linux system that provides you access to a well-rounded R development environment. You can see an example of the RStudio IDE in action in Figure 2.3.

Figure 2.3 RStudio Desktop offers an IDE for Windows, Mac, and Linux systems.

If you haven't already installed RStudio Desktop on your computer, go ahead and do so now. You can download the most recent version at https://www.rstudio.com/products/rstudio/download/#download.

RStudio Server

RStudio also offers a server version of the RStudio IDE. This version is ideal for teams that work together on R code and want to maintain a centralized repository. When you use the server version of RStudio, you may access the IDE through a web browser. The server then presents a windowed view to you that appears similar to the desktop environment. You can see an example of the web-based IDE in Figure 2.4.

Using RStudio Server requires building a Linux server, either on-premises or in the cloud, and then installing the RStudio Server code on that server. If your organization already uses RStudio Server, you may use that as you follow along with the examples in this book.

Exploring the RStudio Environment

Let's take a quick tour of the RStudio Desktop environment and become oriented with the different windows that you see when you open RStudio.

Figure 2.4 RStudio Server provides a web-based IDE for collaborative use.

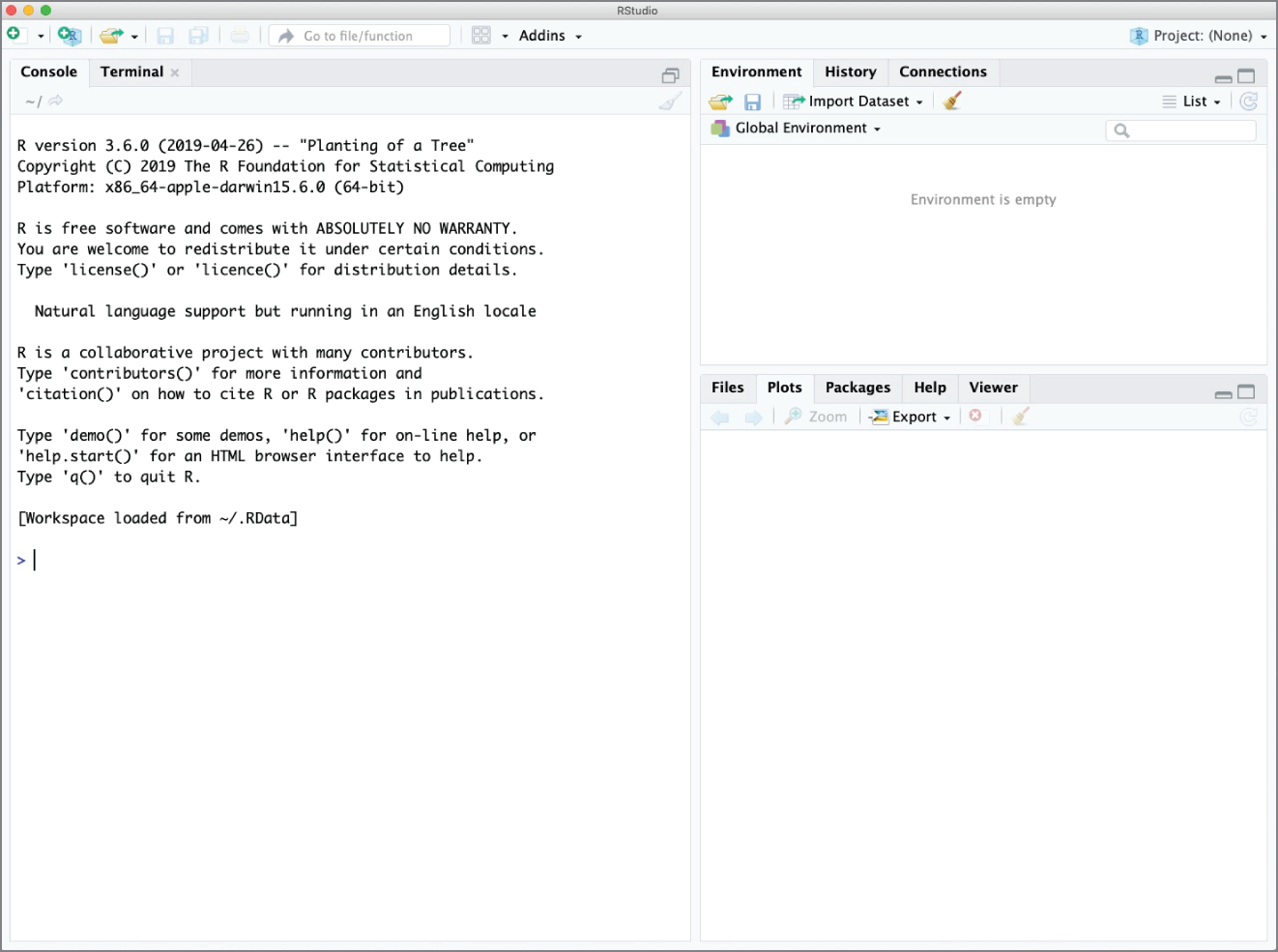

Console Pane

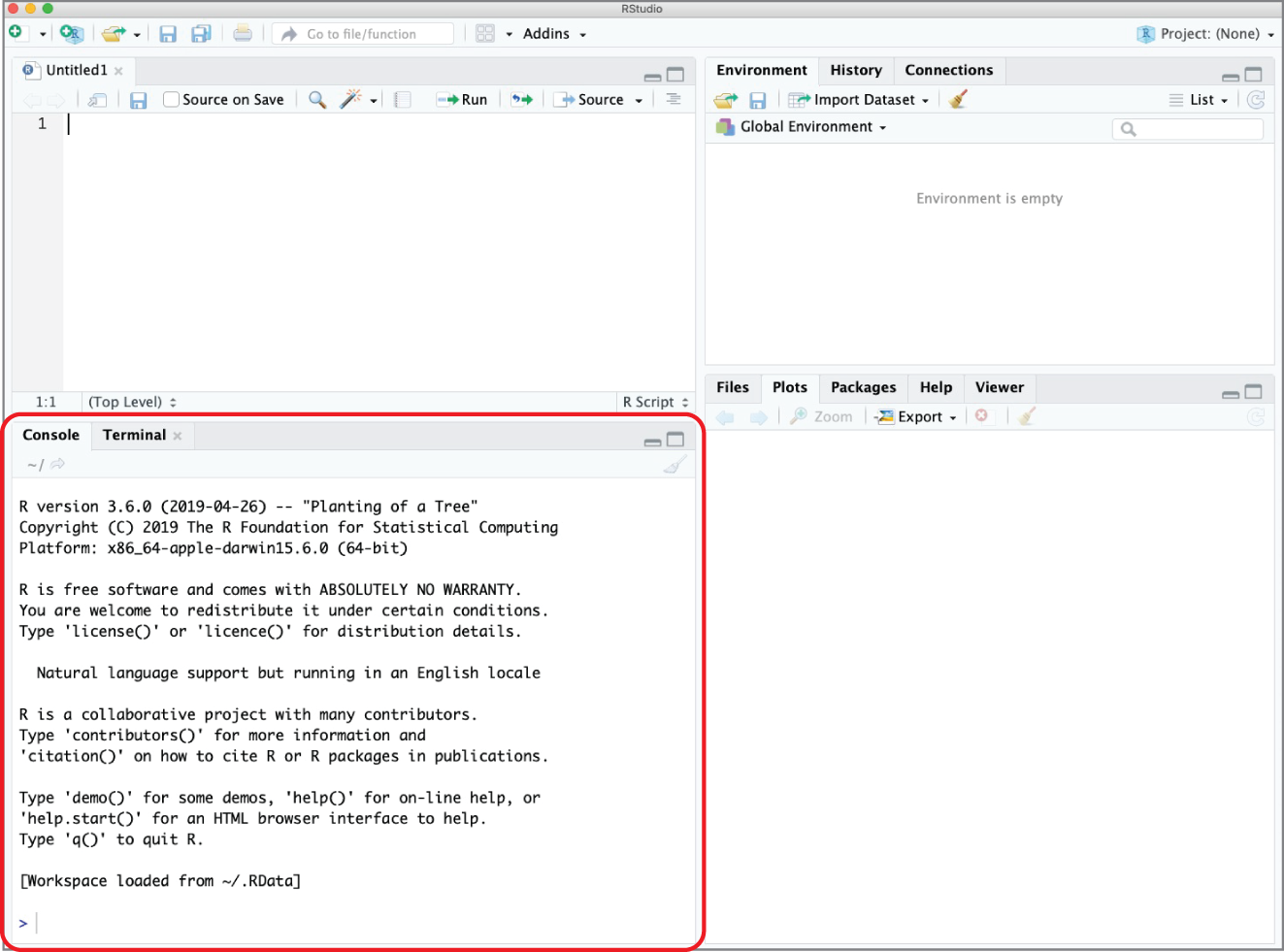

When you first open RStudio, you won't see the view shown in Figure 2.3. Instead, you'll see a view that has only three windows, shown in Figure 2.5. That's because you haven't yet opened or created an R script.

In this view, the console pane appears on the left side of the RStudio window. Once you have a script open, it appears in the lower-left corner, as shown in Figure 2.6.

Tip

The window layout shown in Figure 2.6 is the default configuration of RStudio. It is possible to change this default layout to match your own preferences. If your environment doesn't exactly match the one shown in the figure, don't worry about it—just look for the window pane titles and tabs that we discuss.

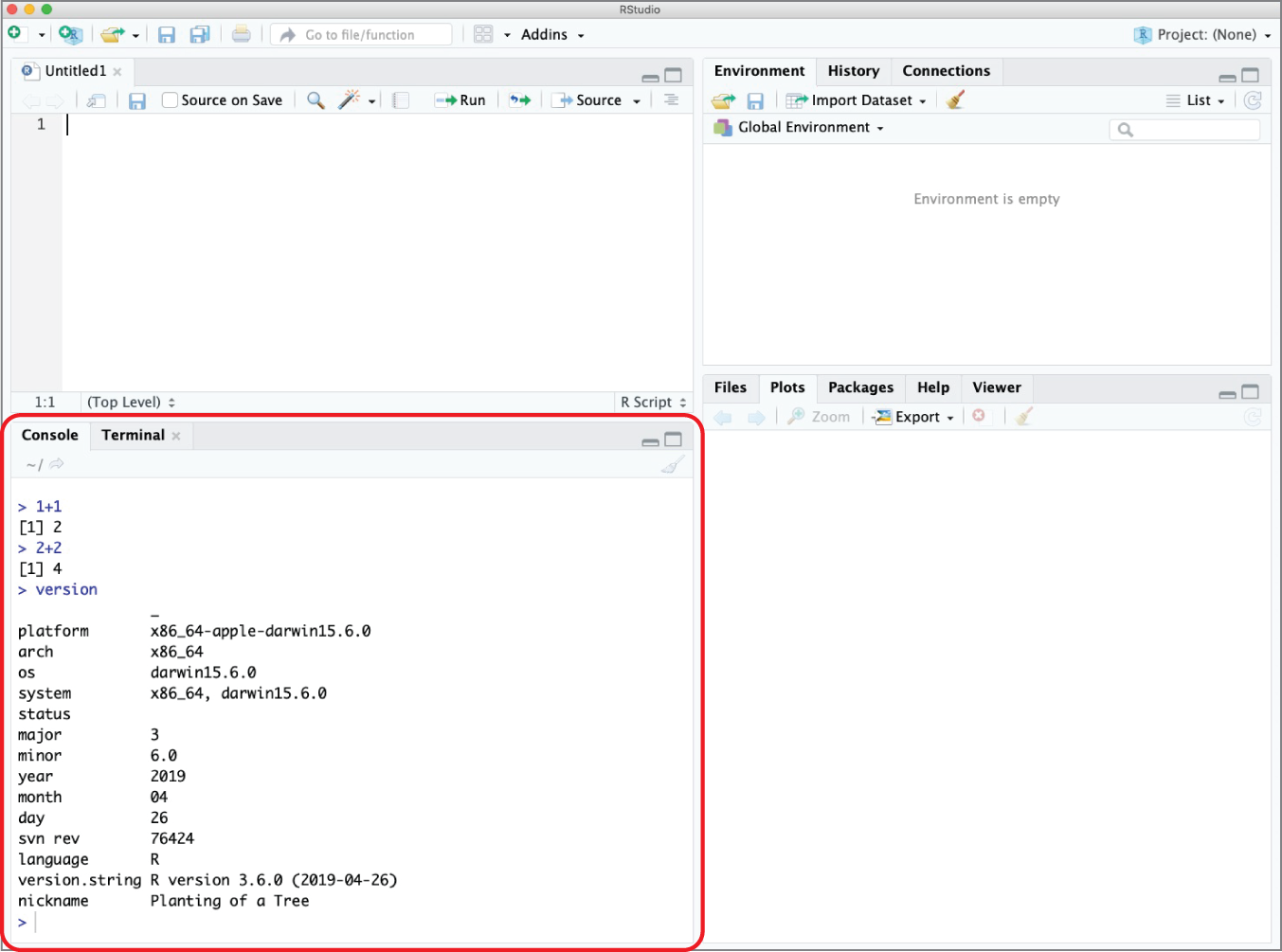

The console window allows you to interact directly with the R interpreter. You can type commands here and R will immediately execute them. For example, Figure 2.7 shows just the console pane executing several simple commands. Notice that the command entered by the user is immediately followed by an answer from the R interpreter.

Figure 2.5 RStudio Desktop without a script open

Figure 2.6 RStudio Desktop with the console pane highlighted

Figure 2.7 Console pane executing several simple R commands

Tip

The history of commands executed by a user in R is also stored in a file on the local system. This file is named .Rhistory and is stored in the current working directory.

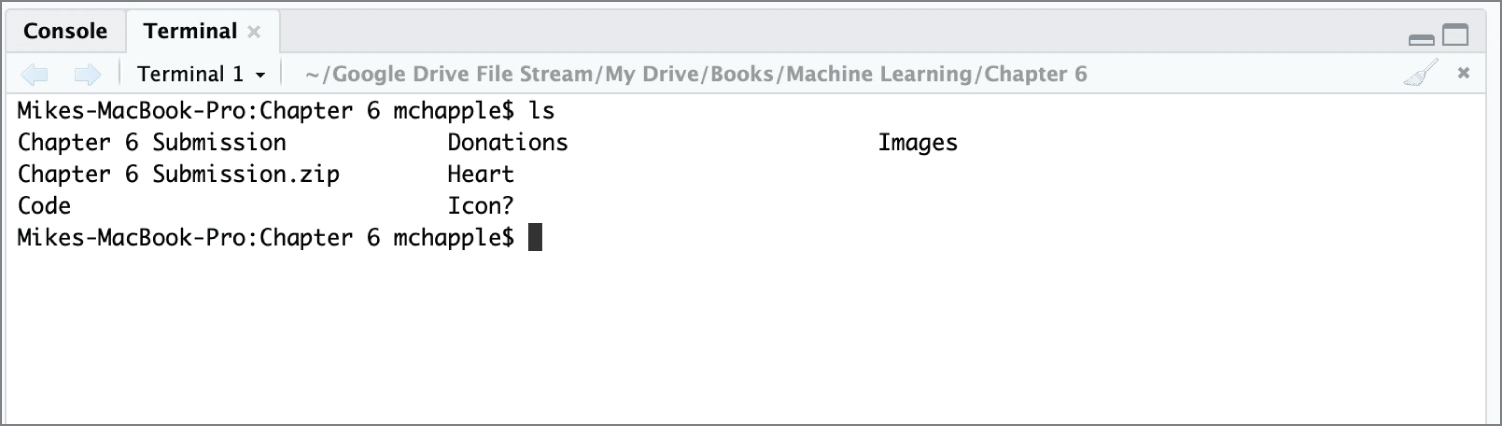

You also should observe that the console pane includes a tab titled Terminal. This tab allows you to open a terminal session directly to your operating system. It's the same as opening a shell session on a Linux system, a terminal window on a Mac, or a command prompt on a Windows system. This terminal won't interact directly with your R code and is there merely for your convenience. You can see an example of running Mac terminal commands in Figure 2.8.

Figure 2.8 Accessing the Mac terminal in RStudio

Script Pane

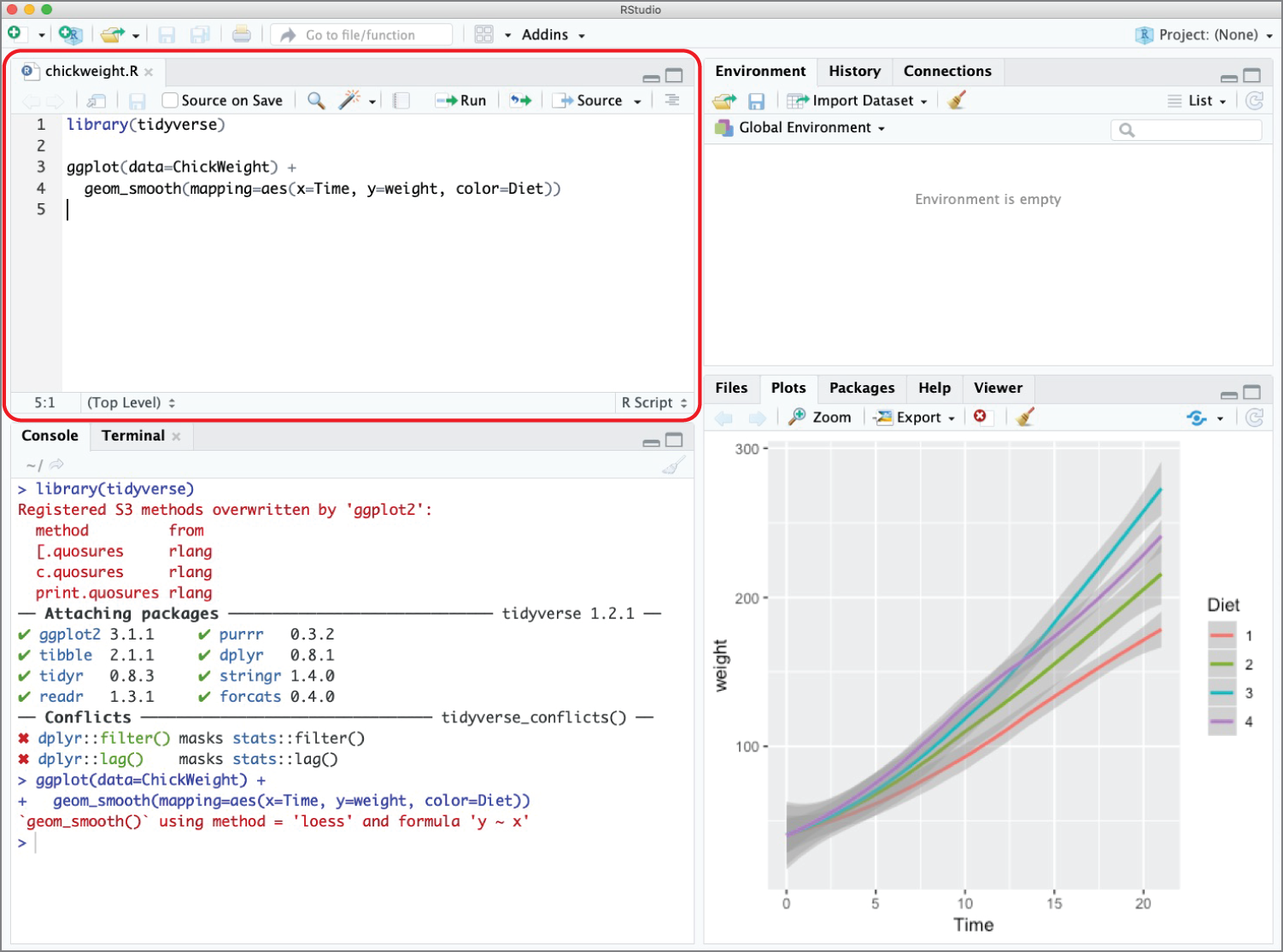

The script pane is where the magic happens! You generally won't want to execute R commands directly in the console. Instead, you'll normally write R commands in a script file that you can save to edit or reuse at a later date. An R script is simply a text file containing R commands. When you write an R script in the RStudio IDE, R will color-code different elements of your code to make it easier to read.

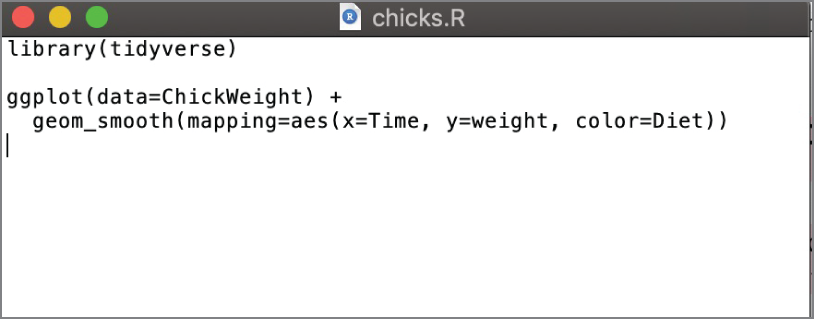

Figure 2.9 shows an example of an R script rendered inside the script pane in RStudio.

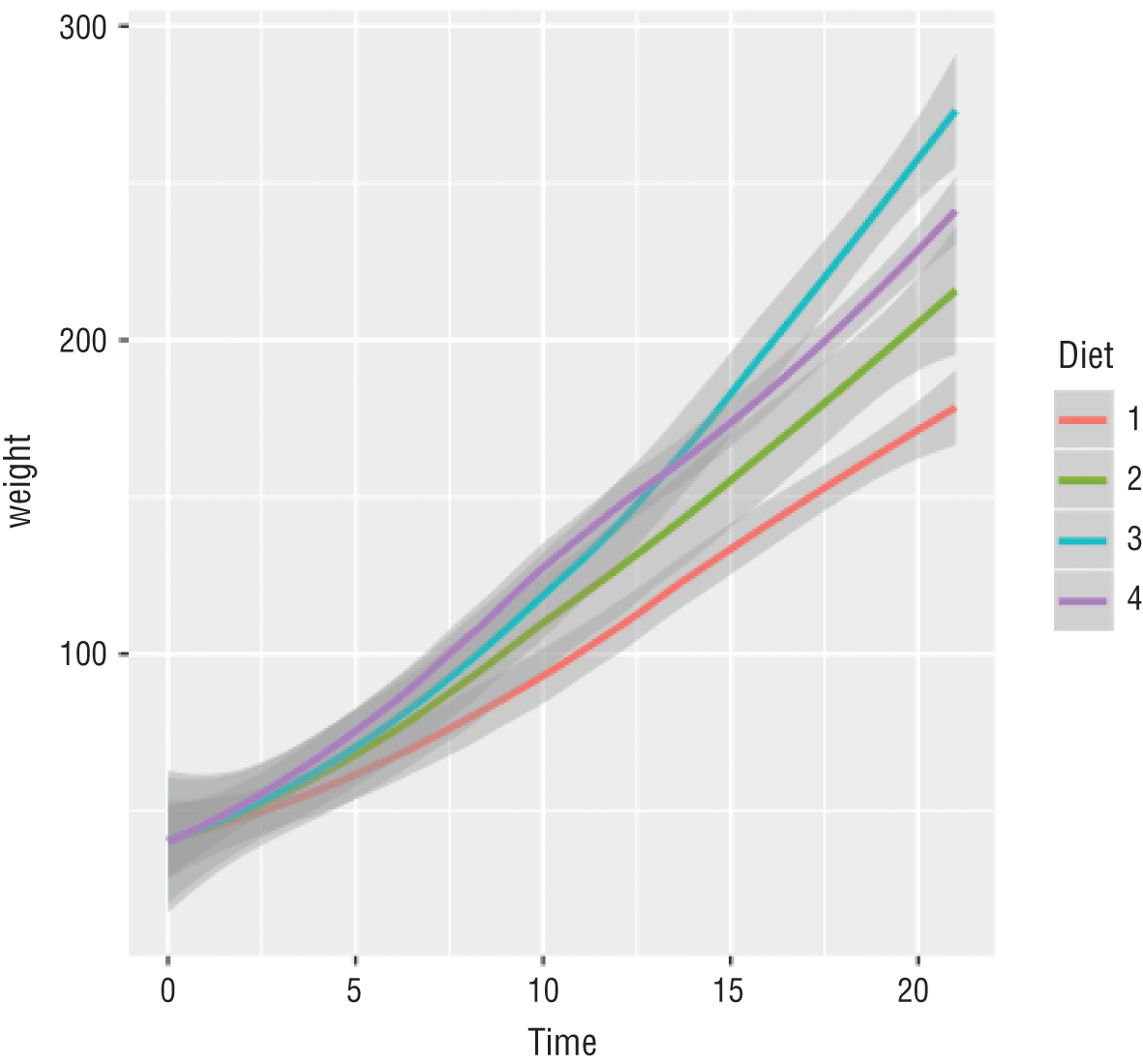

This is a simple script that loads a dataset containing information about the weights of a sample of baby chickens and creates the graph shown in Figure 2.10.

Figure 2.11 shows the same script file, opened using a simple text editor. Notice that the code is identical. The only difference is that when you open the file in RStudio, you see some color-coding to help you parse the code.

You can open an existing script in RStudio either by choosing File ⇨ Open File from the top menu or by clicking the file open icon in the taskbar. You may create a new script by choosing File ⇨ New File ⇨ R Script from the top menu or by clicking the icon of a sheet of paper with a plus symbol in the taskbar.

Figure 2.9 Chick weight script inside the RStudio IDE

Figure 2.10 Graph produced by the chick weight script

Figure 2.11 Chick weight script inside a text editor

Tip

When you are editing a script in RStudio, the name of the script will appear in red with an asterisk next to it whenever you have unsaved changes. This is just a visual reminder to save your code often! When you save your code, the asterisk will disappear, and the filename will revert to black.

Environment Pane

The environment pane allows you to take a look inside the current operating environment of R. You can see the values of variables, datasets, and other objects that are currently stored in memory. This visual insight into the operating environment of R is one of the most compelling reasons to use the RStudio IDE instead of a standard text editor to create your R scripts. Access to easily see the contents of memory is a valuable tool when developing and troubleshooting your code.

The environment pane in Figure 2.9 is empty because the R script that we used in that case did not store any data in memory. Instead, it used the ChickWeight dataset that is built into R.

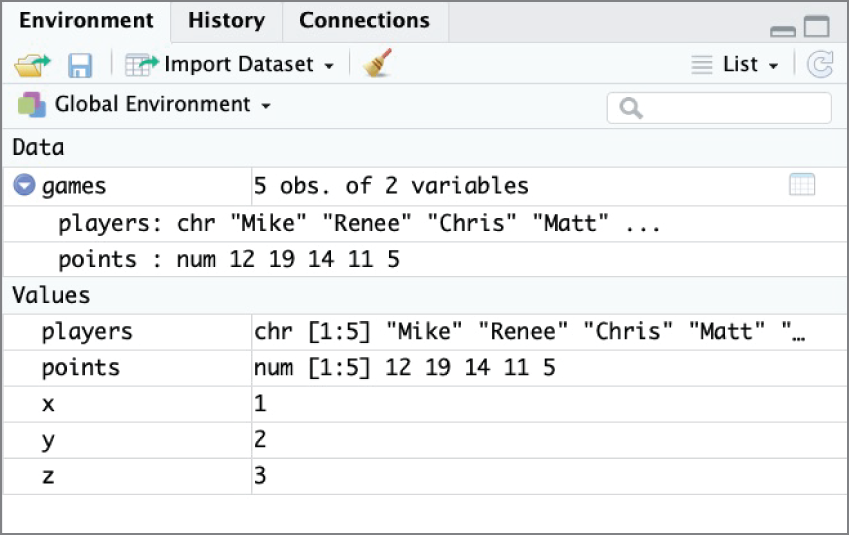

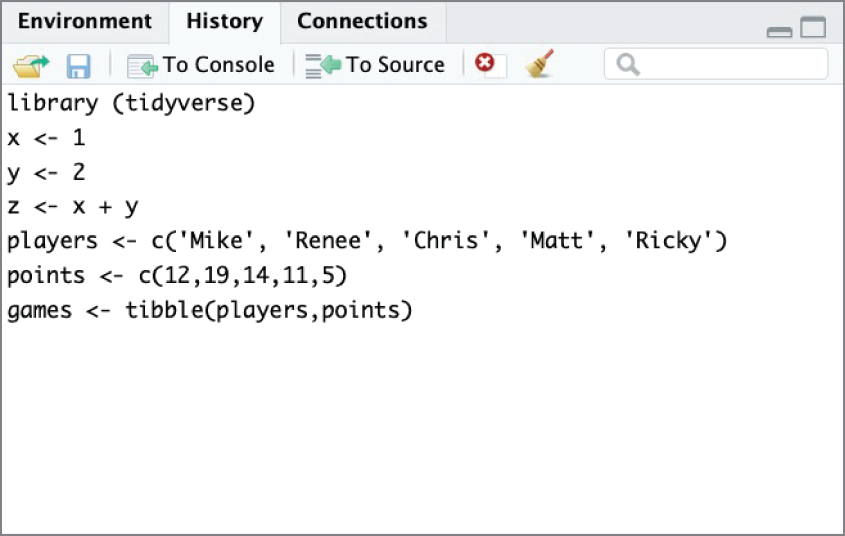

Figure 2.12 shows the RStudio environment pane populated with several variables, vectors, and a full dataset stored in an object known as a tibble. We'll discuss tibbles more in Chapter 3.

You can also use tabs in the same pane to access two other RStudio features. The History tab shows the R commands that were executed during the current session and is shown in Figure 2.13. The Connections tab is used to create and manage connections to external data sources, a technique that is beyond the scope of this book.

Figure 2.12 RStudio environment pane populated with data

Figure 2.13 RStudio History pane showing previously executed commands

Plots Pane

The final pane of the RStudio window appears in the lower-right corner of Figure 2.9. This pane defaults to the plot view and will contain any graphics that you generate in your R code. In Figure 2.9, this pane contains the plot of chick weights by diet type that was created in our sample R script. As you can see in Figure 2.5, this pane is empty when you first open RStudio and have not yet executed any commands that generate plots.

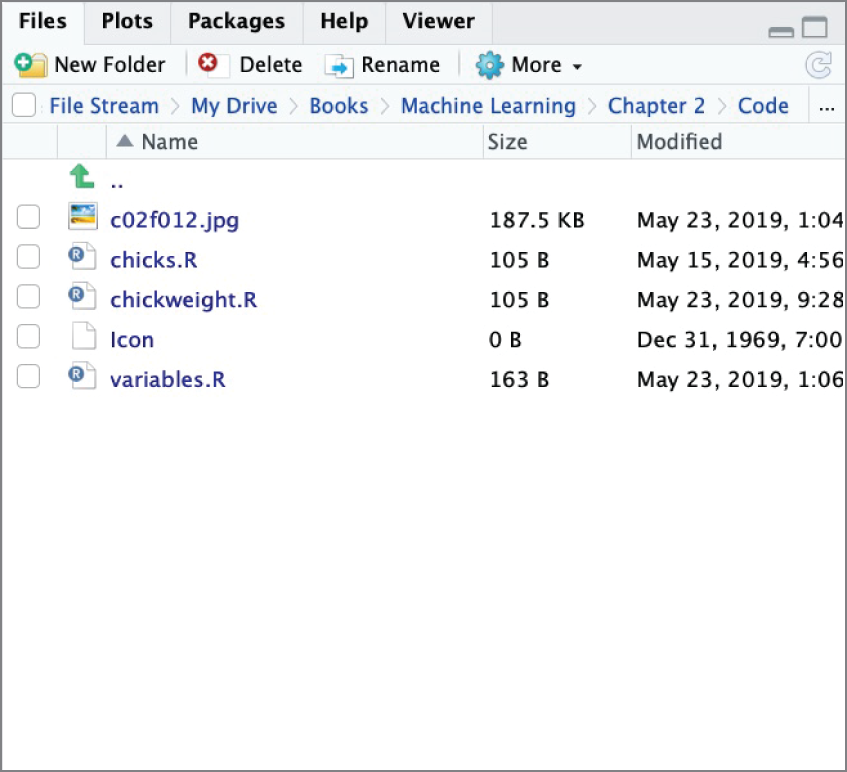

This pane also has several other tabs available. The Files tab, shown in Figure 2.14, allows you to navigate the filesystem on your device to open and manage R scripts and other files.

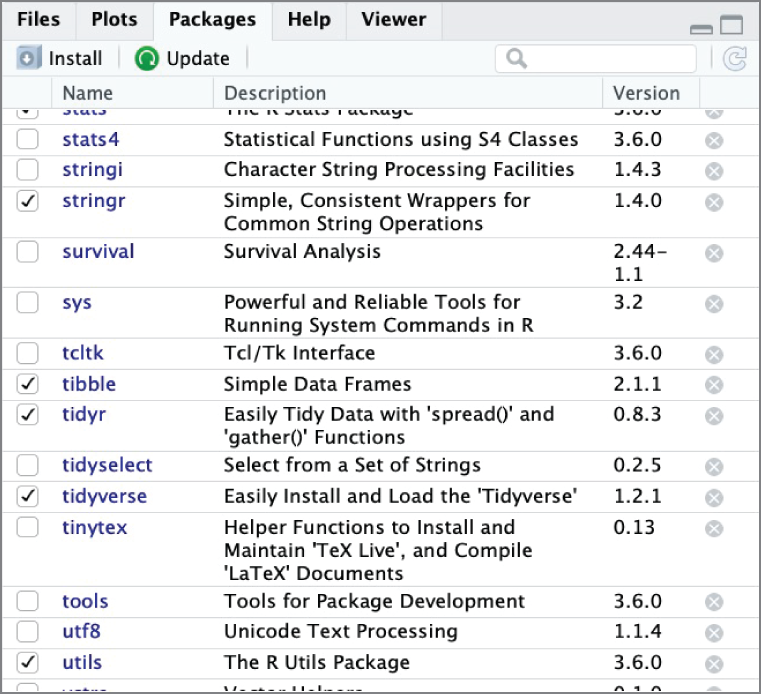

Figure 2.15 shows the Packages tab in RStudio, which allows you to install, update, and load packages. Many people prefer to perform these tasks directly in R code, but this is a convenient location to verify the packages installed on a system as well as their current version number.

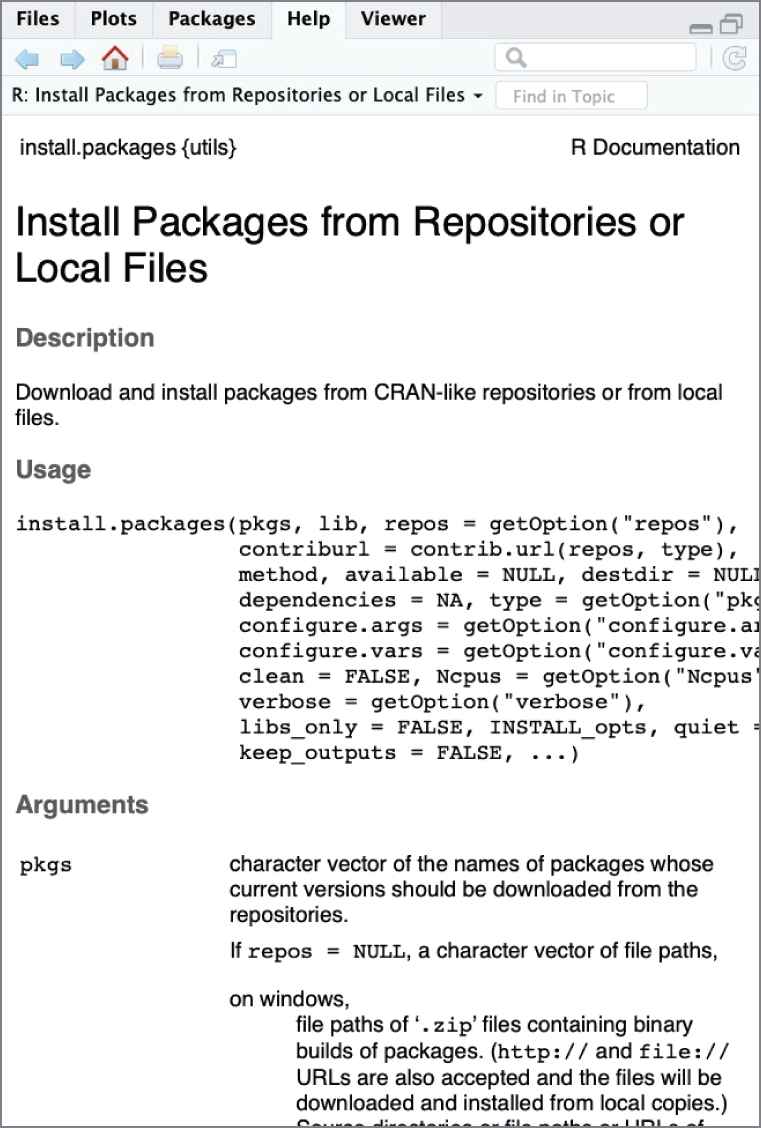

The Help tab provides convenient access to the R documentation. You can access this by searching within the Help tab or using the ? command at the console, followed by the name of the command for which you would like to see documentation. Figure 2.16 shows the result of executing the ?install.packages command at the console to view help for the install.packages() function.

The final tab, Viewer, is used for displaying local web content, such as that created using Shiny. This functionality is also beyond the scope of this book.

Figure 2.14 The Files tab in RStudio allows you to interact with your device's local filesystem.

Figure 2.15 The Packages tab in RStudio allows you to view and manage the packages installed on a system.

Figure 2.16 The Help tab in RStudio displaying documentation for the install.packages() command

R Packages

Packages are the secret sauce of the R community. They consist of collections of code created by the community and shared widely for public use. As you saw in Figure 2.1, the number of publicly available R packages has skyrocketed in recent years. These packages range from extremely popular and widely used packages, such as the tidyverse, to highly specialized packages that serve narrow niches of the R community.

In this book, we will use a variety of R packages to import and manipulate data, as well as to build machine learning models. We'll introduce you to these packages as they arise.

The CRAN Repository

The Comprehensive R Archive Network is the official repository of R packages maintained by the R community and coordinated by the R Foundation. CRAN volunteers manage the repository to ensure that all packages meet some key criteria, including that each package does the following:

- Makes a nontrivial contribution to the R community

- Is released under an open source license by individuals or organizations with the authority to do so

- Designates an individual as package maintainer and provides contact information for that individual

- Uses efficient code that minimizes file sizes and computing resource utilization

- Passes CRAN quality control checks

CRAN is the default package repository in RStudio, and all of the packages used in this book are available through CRAN.

Installing Packages

Before you can use a package in your R script, you must ensure that the package is installed on your system. Installing a package downloads the code from the repository, installs any other packages required by the code, and performs whatever steps are necessary to install the package on the system, such as compiling code and moving files.

The install.packages() command is the easiest way to install R packages on your system. For example, here is the command to install the RWeka package on your system and the corresponding output:

Code snippet

> install.packages("RWeka") also installing the dependencies ‘RWekajars’, ‘rJava’ trying URL 'https://cran.rstudio.com/bin/macosx/el-capitan/contrib/3.6/RWekajars_3.9.3-1.tgz'Content type 'application/x-gzip' length 10040528 bytes (9.6 MB)==================================================downloaded 9.6 MB trying URL 'https://cran.rstudio.com/bin/macosx/el-capitan/contrib/3.6/rJava_0.9-11.tgz'Content type 'application/x-gzip' length 745354 bytes (727 KB)==================================================downloaded 727 KB trying URL 'https://cran.rstudio.com/bin/macosx/el-capitan/contrib/3.6/RWeka_0.4-40.tgz'Content type 'application/x-gzip' length 632071 bytes (617 KB)==================================================downloaded 617 KB The downloaded binary packages are in /var/folders/f0/yd4s93v92tl2h9ck9ty20kxh000gn/T//RtmpjNb5IB/downloaded_packagesNotice that, in addition to installing the RWeka package, the command also installed the RWekajars and rJava packages. The RWeka package uses functions included in these packages, creating what is known as a dependency between the two packages. The install.packages() command resolves these dependencies by installing the two required packages before installing RWeka.

Hey, You!

You only need to install a package once on each system that you use. Therefore, most people prefer to execute the install.packages() command at the console, rather than in their R scripts. It is considered bad form to prompt the installation of packages on someone else's system!

Loading Packages

You must load a package into your R session any time you would like to use it in your code. While you only need to install a package once on a system, you must load it any time that you want to use it. Installing a package makes it available on your system, while loading it makes it available for use in the current environment.

You load a package into your R session using the library() command. For example, the following command loads the tidyverse package that we will be using throughout this book:

Code snippet

library(tidyverse)Note

If you were reading carefully, you might have noticed that the install.packages() command enclosed the package name in quotes while the library() command did not. This is the standard convention for most R users. The library() command will work whether or not you enclose the package name in quotes. The install.packages() command requires the quotation marks. Also, it is important to note that single and double quotation marks are mostly interchangeable in R.

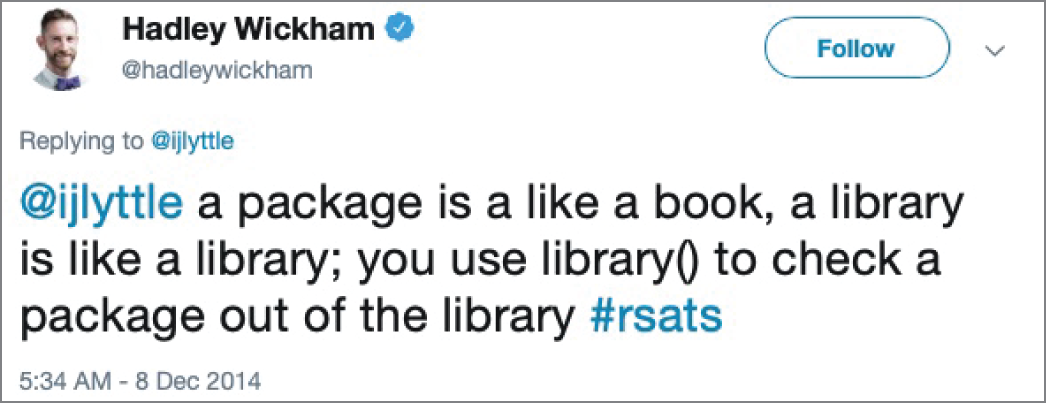

Many people who code in R use the terms package and library interchangeably. They are actually slightly different. The code bundles stored in the CRAN repository (and other locations) are known as packages. You use the install.packages() command to place the package on your system and the library() command to load it into memory. Hadley Wickham, a well-known R developer, summed this concept up well in December 2014 tweet, shown in Figure 2.17.

Package Documentation

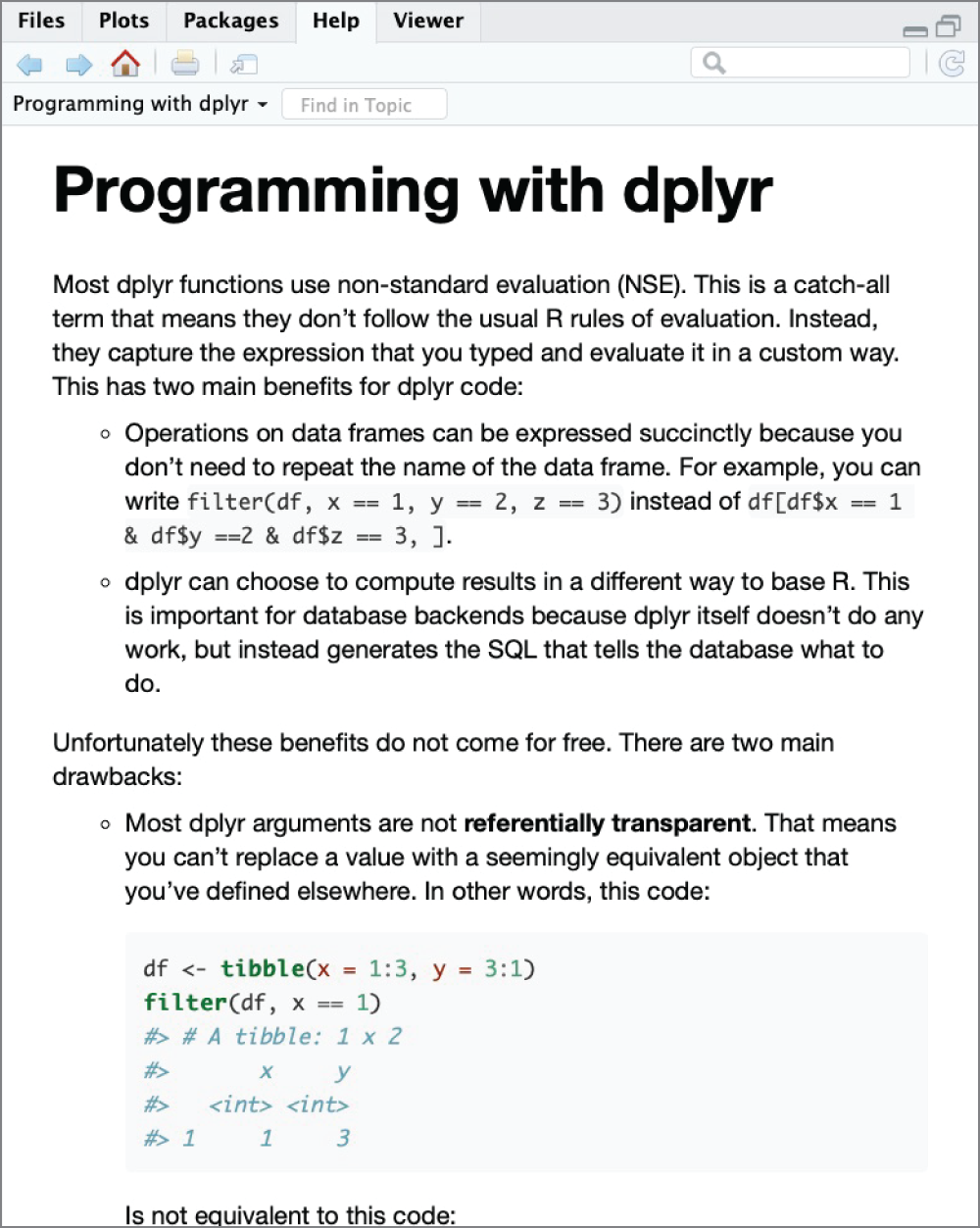

We've already discussed the use of the ? command to access the help file for a function contained within a package. Package authors also often create more detailed explanations of the use of their packages, including examples, in files called vignettes. You can access vignettes using the vignette() command. For example, the following command finds all of the vignettes associated with R's dplyr package:

Code snippet

> vignette(package = 'dplyr')Vignettes in package ‘dplyr’:

compatibility dplyr compatibility (source, html)dplyr Introduction to dplyr (source, html)programming Programming with dplyr (source, html)two-table Two-table verbs (source, html)window-functions Window functions (source, html)

Figure 2.17 Hadley Wickham on the distinction between packages and libraries

If you wanted to see the vignette called programming, you would use this command:

Code snippet

vignette(package = 'dplyr', topic = 'programming')Figure 2.18 shows the result of executing this command: a lengthy document describing how to write code using the dplyr package.

Figure 2.18 RStudio displaying the programming vignette from the dplyr package

WRITING AND RUNNING AN R SCRIPT

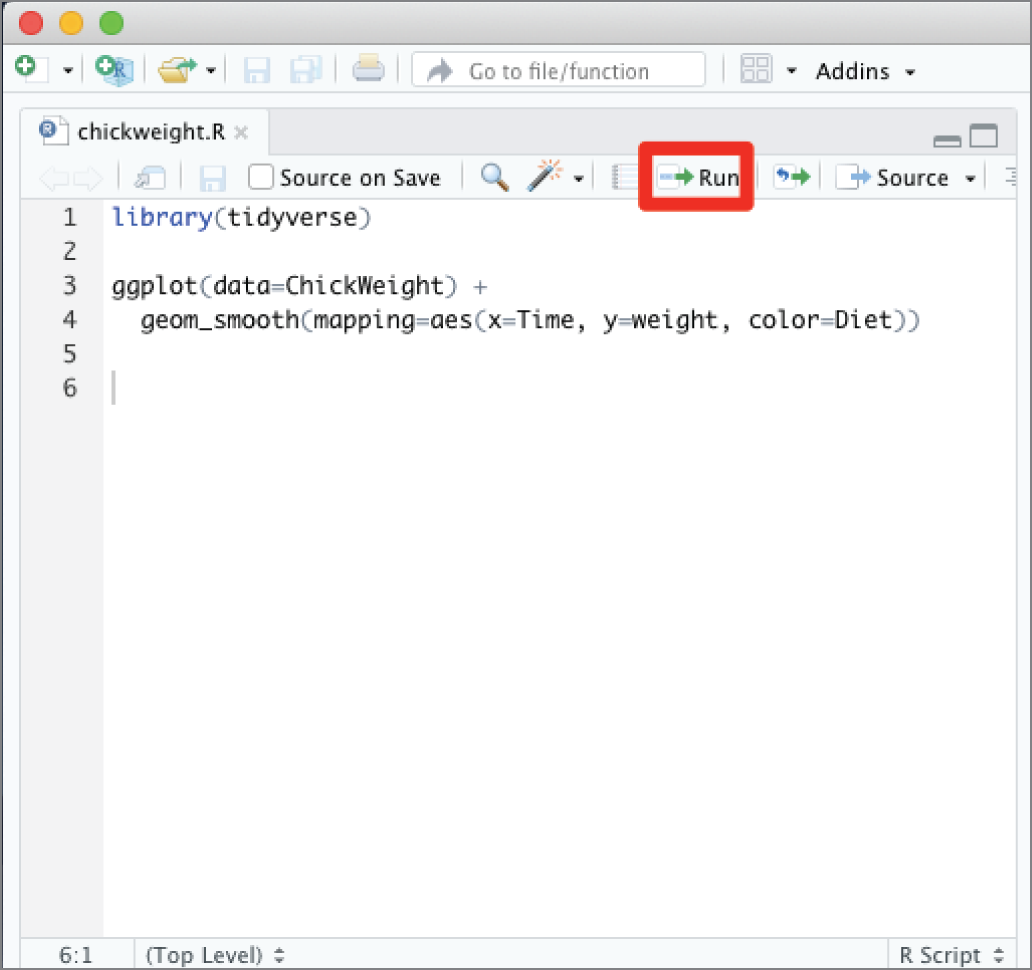

As we mentioned earlier, the most common way to work in RStudio is to write scripts containing a series of R commands that you can save and reuse at a later date. These R scripts are simply text files that you write inside RStudio's script window pane and save on your system or in a cloud storage location. Figure 2.9 showed a simple script open in RStudio.

When you want to execute your script, you have two options: the Run button and the Source button. When you click the Run button, highlighted in Figure 2.19, RStudio will execute the current section of code. If you do not have any text highlighted in your script, this will execute whatever line the cursor is currently placed on. In Figure 2.19, line 6 contains no code, so the Run button will not do anything. If you move the cursor to the first line of code, clicking the Run button would run line 1, loading the tidyverse, and then automatically advance to the next line of the script that contains code, line 3 (because line 2 is blank). Clicking the Run button a second time would run the code on lines 3 and 4 because they combine to form a single statement in R.

The Run button is a common way to execute code in R during the development and troubleshooting stages. It allows you to execute your script as you write it, monitoring the results.

Hey, You!

Many of the commands in RStudio are also accessible via keyboard shortcuts. For example, you may run the current line of code by pressing Ctrl+Enter. See https://support.rstudio.com/hc/en-us/articles/200711853-Keyboard-Shortcuts for an exhaustive list of keyboard shortcuts.

Figure 2.19 The Run button in RStudio runs the current section of code.

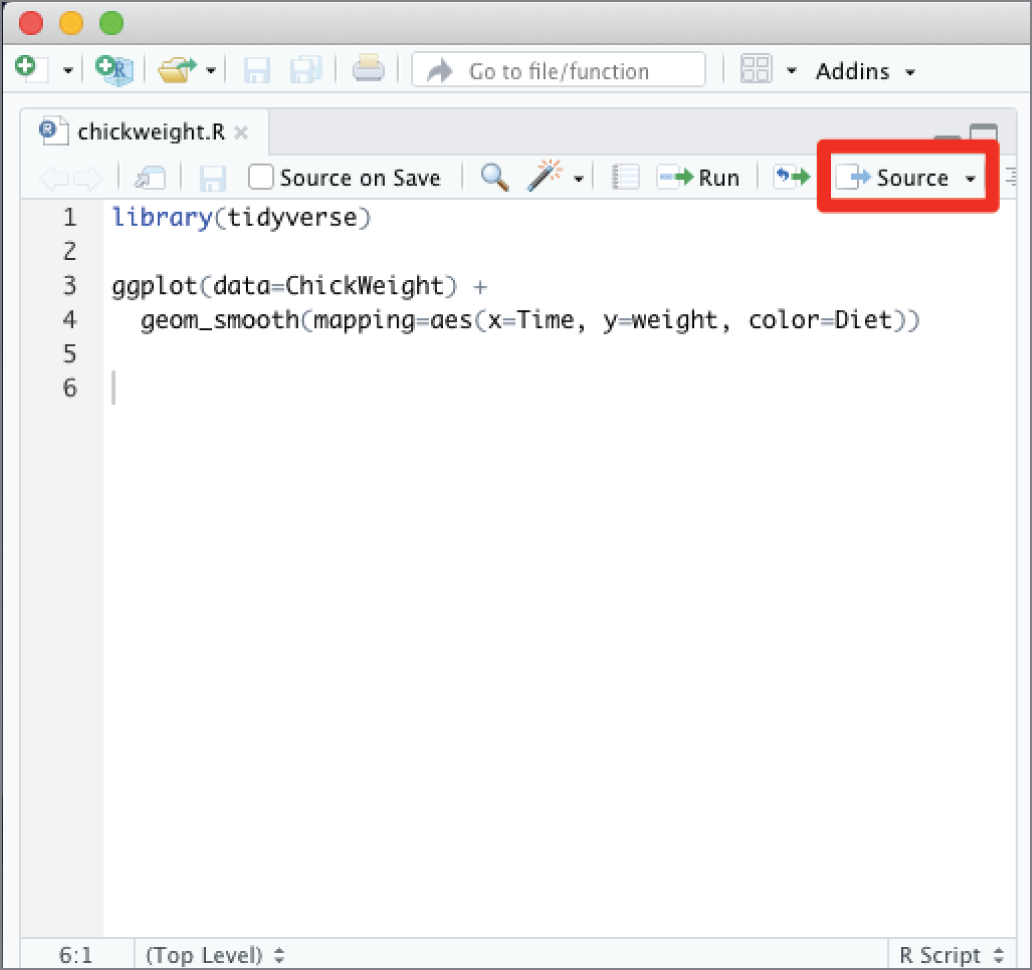

The Source button, highlighted in Figure 2.20, will save any changes that you've made to your script and then execute the entire file at once. This is a useful way to quickly run an entire script.

Tip

The Source button does not display any output to the screen by default. If you want to see the results of your script as it runs, click the small arrow to the right of the Source button and choose Source with Echo. This will cause each line of the script to appear in the console as it is executed, and plots will appear in the Plots pane.

Warning

When you execute a script using the Source button (or the Run button, for that matter), the script runs in the context of the current environment. This may use data that you created during earlier executions. If you want to run in a clean environment, be sure to clear objects from your workspace using the broom icon in the Environment pane before clicking the Source button.

Figure 2.20 The Source button in RStudio runs the entire script.

DATA TYPES IN R

As with most programming languages, all of the variables that you create in an R script have an associated data type. The data type defines the way that R stores the information contained within the variable and the range of possible values. Here are some of the more common data types in R:

- The logical data type is a simple binary variable that may have only two values:

TRUEorFALSE. It's an efficient way to store data that can take on these two values only. These data elements are also commonly referred to as flags. For example, we might have a variable in a dataset about students calledMarriedthat would be set toTRUEfor individuals who are married andFALSEfor individuals who are not. - The numeric data type stores decimal numbers, while the integer data type stores integers. If you create a variable containing a number without specifying a data type, R will store it as numeric by default. However, R can usually automatically convert between the numeric and integer data types as needed.

Tip

R also calls the numeric data type double, which is short for a double-precision floating-point number. The terms numeric and double are interchangeable.

- The character data type is used to store text strings of up to 65,535 characters each.

- The factor data type is used to store categorical values. Each possible value of a factor is known as a level. For example, you might use a factor to store the U.S. state where an individual lives. Each one of the 50 states would be a possible level of that factor.

- The ordered factor data type is a special case of the factor data type where the order of the levels is significant. For example, if we have a factor containing risk ratings of Low, Medium, and High, the order is significant because Medium is greater than Low and because High is greater than Medium. Ordered factors preserve this significance. A list of U.S. states, on the other hand, would not be stored as an ordered factor because there is no logical ordering of states.

Note

These are the most commonly used data types in R. The language does offer many other data types for special-purpose applications. You may encounter these in your machine learning projects, but we will stick to these common data types in this book.

Vectors

Vectors are a way to collect elements of the same data type in R together in a sequence. Each data element in a vector is called a component of that vector. Vectors are a convenient way to collect data elements of the same type together and keep them in a specific order.

We can use the c() function to create a new vector. For example, we might create the following two vectors, one containing names and another containing test scores:

Code snippet

> names <- c('Mike', 'Renee', 'Richard', 'Matthew', 'Christopher') > scores <- c(85, 92, 95, 97, 96)Once we have data stored in a vector, we can access individual components of that vector by placing the number of the element that we would like to retrieve in square brackets immediately following the vector name. Here's an example:

Code snippet

> names[1][1] "Mike" > names[2][1] "Renee" > scores[3][1] 95Tip

The first element of a vector in R is element 1 because R uses 1-based indexing. This is different from Python and some other programming languages that use 0-based indexing and label the first element of a vector as element 0.

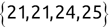

There are also functions in R that will work on an entire vector at once. For example, you can use the mean(), median(), min(), and max() functions to find the average, median, smallest, and largest elements of a numeric vector, respectively. Similarly, the sum() function adds the elements of a numeric vector.

Code snippet

> mean(scores)[1] 93 > median(scores)[1] 95 > min(scores)[1] 85 > max(scores)[1] 97 > sum(scores)[1] 465All of the components of a vector must be of the same data type. If you attempt to create a vector with varying data types, R will force them all to be the same data type. This is a process known as coercion. For example, if we try to create a mixed vector containing both character strings and numeric values:

Code snippet

> mixed <- c('Mike', 85, 'Renee', 92, 'Richard', 95, 'Matthew', 97, 'Christopher', 96)the command appears to successfully create the vector, but when we go and examine the contents of that vector:

Code snippet

> mixed [1] "Mike" "85" "Renee" "92" "Richard" "95" "Matthew" [8] "97" "Christopher" "96"we find that R has converted all of the elements to character strings. We can combine vectors of unlike types into data structures that resemble spreadsheets. The traditional way to do this in R is through a data structure known as a data frame. For example, we can combine the names and scores vectors into a data frame called testResults.

Code snippet

> testResults <- data.frame(names, scores) > testResults names scores1 Mike 852 Renee 923 Richard 954 Matthew 975 Christopher 96You may access the vectors stored within a data frame using the $ operator. For example, if you wanted to calculate the mean test score, you could use the following code:

Code snippet

> mean(testResults$scores)[1] 93In Chapter 3, we will discuss how the tidyverse package uses an enhanced version of a data frame called a tibble. We will then use tibbles as our primary data structure in the remainder of this book.

Testing Data Types

When we use objects in R, we may want to learn more about their data type, either by directly asking a question about the object's type or by testing it programmatically. The R language includes functions designed to assist with these tasks.

The class() function returns the data type of an object. For example, examine the following sample code:

Code snippet

> x <- TRUE> y <- 1> z <- 'Mike Chapple' > class(x)[1] "logical" > class(y)[1] "numeric" > class(z)[1] "character"Notice that when we assign the values of x, y, and z, we do not need to explicitly assign the data types. When you perform the assignments, R interprets the arguments you provide and makes assumptions about the correct data type. In the next section, we'll talk about how you can use the as.x() functions in R to explicitly convert data types.

If you'd like to create a factor data type in R, you can use the factor() function to convert a vector of character strings into a factor. For example, the following code creates a character vector, tests the class, converts it to a factor, and retests the class:

Code snippet

> productCategories <- c('fruit', 'vegetable', 'fruit', 'fruit', 'dry goods', 'dry goods', 'vegetable') > class(productCategories)[1] "character" > productCategories <- factor(productCategories) > class(productCategories)[1] "factor"We can also test the length of an object using the length() function. This function returns the number of components of that object. If the object is a factor or vector, the length() function returns the number of elements in that factor or vector. If the object is a single numeric, character, or logical element, the length() function returns the value 1. For example, look at this code:

Code snippet

> length(x)[1] 1 > length(y)[1] 1 > length(z)[1] 1 > length(productCategories)[1] 7R also includes a set of “is” functions that are designed to test whether an object is of a specific data type and return TRUE if it is and FALSE if it is not. The “is” functions include the following:

is.logical()is.numeric()is.integer()is.character()is.factor()

To use these functions, simply select the appropriate one and pass the object you want to test as an argument. For example, examine the following results using the same data elements x, y, and z that we created earlier in this section:

Code snippet

> is.numeric(x)[1] FALSE > is.character(x)[1] FALSE > is.integer(x)[1] FALSE > is.logical(x)[1] TRUE > is.numeric(y)[1] TRUE > is.integer(y)[1] FALSE > is.character(z)[1] TRUEDo those results make sense to you? If you look back at the code that created those variables, x is the logical value TRUE, so only the is.logical() function returned a value of TRUE, while the other test functions returned FALSE.

The y variable contained an integer value, so the is.integer() function returned TRUE, while the other functions returned FALSE. It is significant to note here that the is.numeric() function also returned FALSE, which may seem counterintuitive given the name of the function. When we created the y variable using the code:

Code snippet

> y <- 1R assumed that we wanted to create a numeric variable, the default type for values consisting of digits. If we wanted to explicitly create an integer, we would need to add the L suffix to the number during creation. Examine this code:

Code snippet

> yint <- 1L > is.integer(yint)[1] TRUE > is.numeric(yint)[1] TRUEHere we see yet another apparent inconsistency. Both the is.numeric() and is.integer() functions returned values of TRUE in this case. This is a nuance of the is.numeric() function. Instead of returning TRUE only when the object tested is of the numeric class, it returns TRUE if it is possible to convert the data contained in the object to the numeric class. We can verify with the class function that y is a numeric data type while yint is an integer.

Code snippet

> class(y)[1] "numeric" > class(yint)[1] "integer"Alternatively, we could also convert the numeric variable we created initially to an integer value using the as.integer() function, which we will introduce in the next section.

The “is” functions also work on vector objects, returning values based upon the data type of the objects contained in the vector. For example, we can test the names and scores vectors that we created in the previous section.

Code snippet

> is.character(names)[1] TRUE > is.numeric(names)[1] FALSE > is.character(scores)[1] FALSE > is.numeric(scores)[1] TRUE > is.integer(scores)[1] FALSEConverting Data Types

You may find yourself in a situation where you need to convert data from one type to another. R provides the “as” functions to perform these conversions. Some of the more commonly used “as” functions in R are the following:

as.logical()as.numeric()as.integer()as.character()as.factor()

Each of these functions takes an object or vector as an argument and attempts to convert it from its existing data type to the data type contained within the function name. Of course, this conversion isn't always possible. If you have a numeric data object containing the value 1.5, R can easily convert this to the “1.5” character string. There is not, however, any reasonable way to convert the character string “apple” into an integer value. Here are a few examples of the “as” functions at work:

Code snippet

> as.numeric("1.5")[1] 1.5 > as.integer("1.5")[1] 1 > as.character(3.14159)[1] "3.14159" > as.integer("apple")[1] NAWarning message:NAs introduced by coercion > as.logical(1)[1] TRUE > as.logical(0)[1] FALSE > as.logical("true")[1] TRUE > as.logical("apple")[1] NAMissing Values

Missing values appear in many datasets because data was not collected, is unknown, or is not relevant. When missing values occur, it's important to distinguish them from blank or zero values. For example, if I don't yet know the price of an item that will be sold in my store, the price is missing. It is definitely not zero, or I would be giving the product away for free!

R uses the special constant value NA to represent missing values in a dataset. You may assign the NA value to any other type of R data element. You can use the is.na() function in R to test whether an object contains the NA value.

Just as the NA value is not the same as a zero or blank value, it's also important to distinguish it from the “NA” character string. We once worked with a dataset that contained two-letter country codes in a field and were puzzled that some records in the dataset were coming up with missing values for the country field, when we did not expect such an occurrence. It turns out that the dataset was being imported from a text file that did not use quotes around the country code and there were several records in the dataset covering the country of Namibia, which, you guessed it, has the country code "NA". When the text file was read into R, it interpreted the string NA (without quotes) as a missing value, converting it to the constant NA instead of the country code "NA".

Note

If you're familiar with the Structured Query Language (SQL), it might be helpful to think of the NA value in R as equivalent to the NULL value in SQL

EXERCISES

- Visit the

r-project.orgwebsite. Download and install the current version of R for your computer. - Visit the

rstudio.comwebsite. Download and install the current version of RStudio for your computer. - Explore the RStudio environment, as explained in this chapter. Create a file called

chicken.Rthat contains the following R script:Code snippet

install.packages("tidyverse") library(tidyverse) ggplot(data=ChickWeight) + geom_smooth(mapping=aes(x=Time, y=weight, color=Diet))Execute your code. It should produce a graph of chicken weights as output.

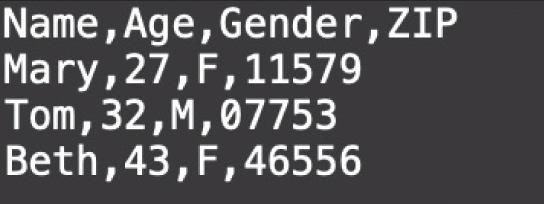

Chapter 3: Managing Data

In Chapter 1, we discussed some of the foundational principles behind machine learning. We followed that discussion with an introduction to both the R programming language and the RStudio development environment in Chapter 2. In this chapter, we explain how to use R to manage our data prior to modeling. The quality of a machine learning model is only as good as the data used to build it. Quite often, this data is not easily accessible, is in the wrong format, or is hard to understand. As a result, it is critically important that prior to building a model, we spend as much time as needed to collect the data we need, explore and understand the data we have, and prepare it so that it is useful for the selected machine learning approach. Typically,  percent of the time we spend in machine learning is, or should be, spent managing data.

percent of the time we spend in machine learning is, or should be, spent managing data.

By the end of this chapter, you will have learned the following:

- What the tidyverse is and how to use it to manage data in R

- How to collect data using R and some of the key things to consider when collecting data

- Different approaches to describe and visualize data in R

- How to clean, transform, and reduce data to make it more useful for the machine learning process

THE TIDYVERSE

The tidyverse is a collection of R packages designed to facilitate the entire analytics process by offering a standardized format for exchanging data between packages. It includes packages designed to import, manipulate, visualize, and model data with a series of functions that easily work across different tidyverse packages.

The following are the major packages that make up the tidyverse:

readrfor importing data into R from a variety of file formatstibblefor storing data in a standardized formatdplyrfor manipulating dataggplot2for visualizing datatidyrfor transforming data into “tidy” formpurrrfor functional programmingstringrfor manipulating stringslubridatefor manipulating dates and times

These are the developer-facing packages that we'll use from the tidyverse, but these packages depend on dozens of other foundational packages to do their work. Fortunately, you can easily install all of the tidyverse packages with a single command:

Code snippet

install.packages("tidyverse")Similarly, you can load the entire tidyverse using this command:

Code snippet

library(tidyverse)In the remainder of this chapter and the rest of this text, we will use several tidyverse packages and functions. As we do so, we will endeavor to provide a brief explanation of what each function does and how it is used. Please note that this book is not intended to be a tutorial on the R programming language or the tidyverse. Rather, the objective is to explain and demonstrate machine learning concepts using those tools. For readers who are interested in a more in-depth introduction to the R programming language and the tidyverse, we recommend the book R for Data Science by Hadley Wickham and Garrett Grolemund.

DATA COLLECTION