This article was sponsored by import.io. Thank you for supporting the sponsors who make SitePoint possible!

The web is full of interesting and useful information. Some of the most successful web applications do nothing more than organize that information in a creative way that’s easy for their users to digest. Ever heard of a little company called Google? However, organizing data from the web can be a difficult and time-consuming process, and many projects never launch because of it. Now import.io is solving this problem, making web data easy to collect, organize, and use to your advantage.

What is import.io?

import.io allows you to collect data from across the web, and then access and manipulate that data through a simple user interface. As highlighted in their introductory video, the process is very quick, and requires absolutely no coding knowledge to complete. After collecting your data, you can view it and apply edits as needed, integrate it into your application as an API (import.io has great documentation with instructions on how to do this in a wide array of languages), or download it in a variety of formats.

Using import.io

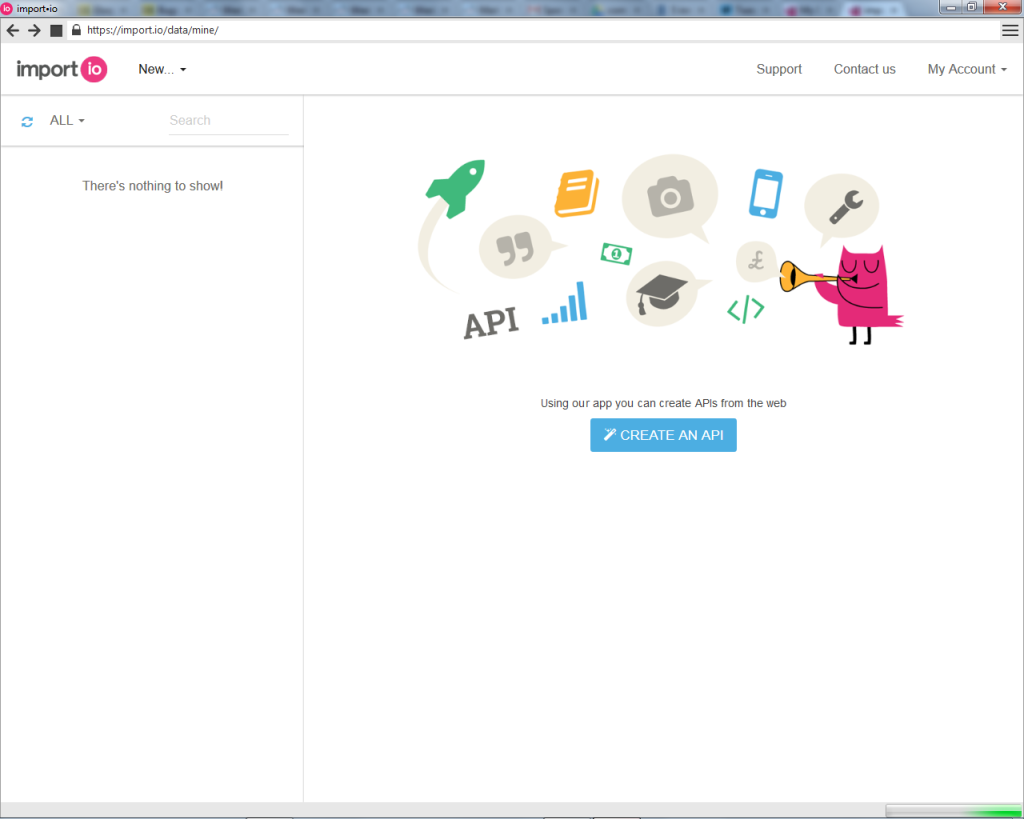

Most applications that involve complicated and computation-heavy processes have equally complicated user interfaces. I was surprised to find how easy import.io was to navigate and use.

After signing up, I downloaded their desktop application (which you will need to collect data).

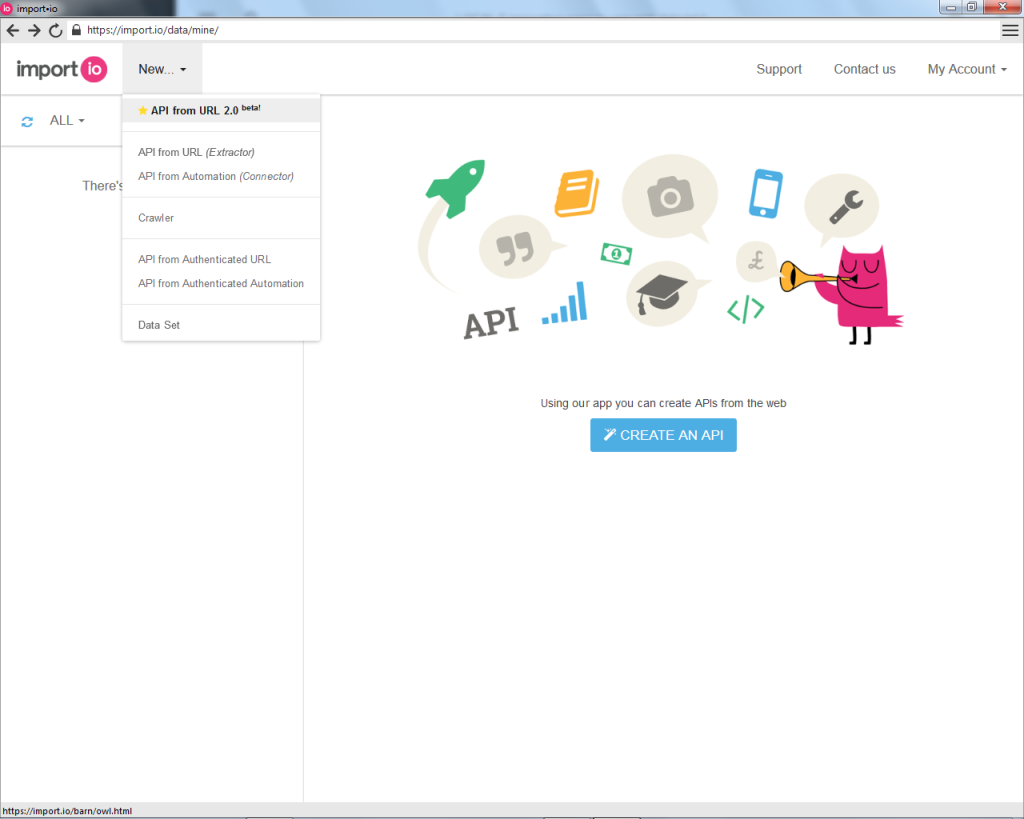

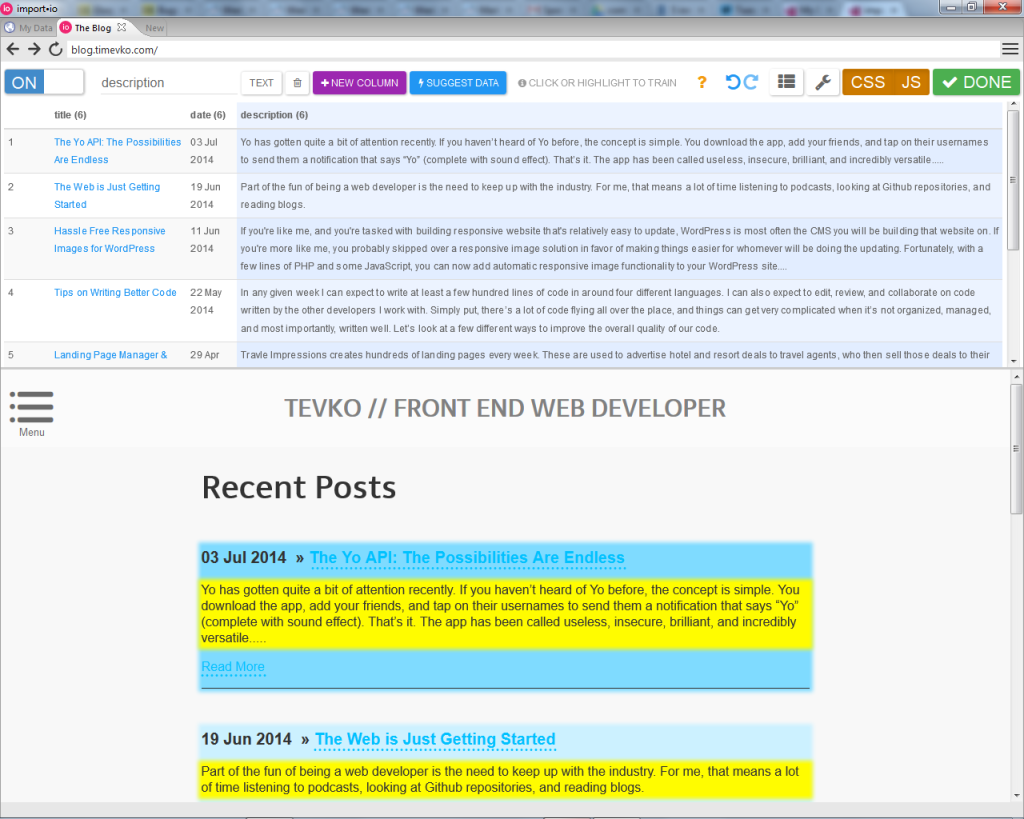

For my first data collection project, I decided to create an API from a URL: a collection of posts from my personal blog.

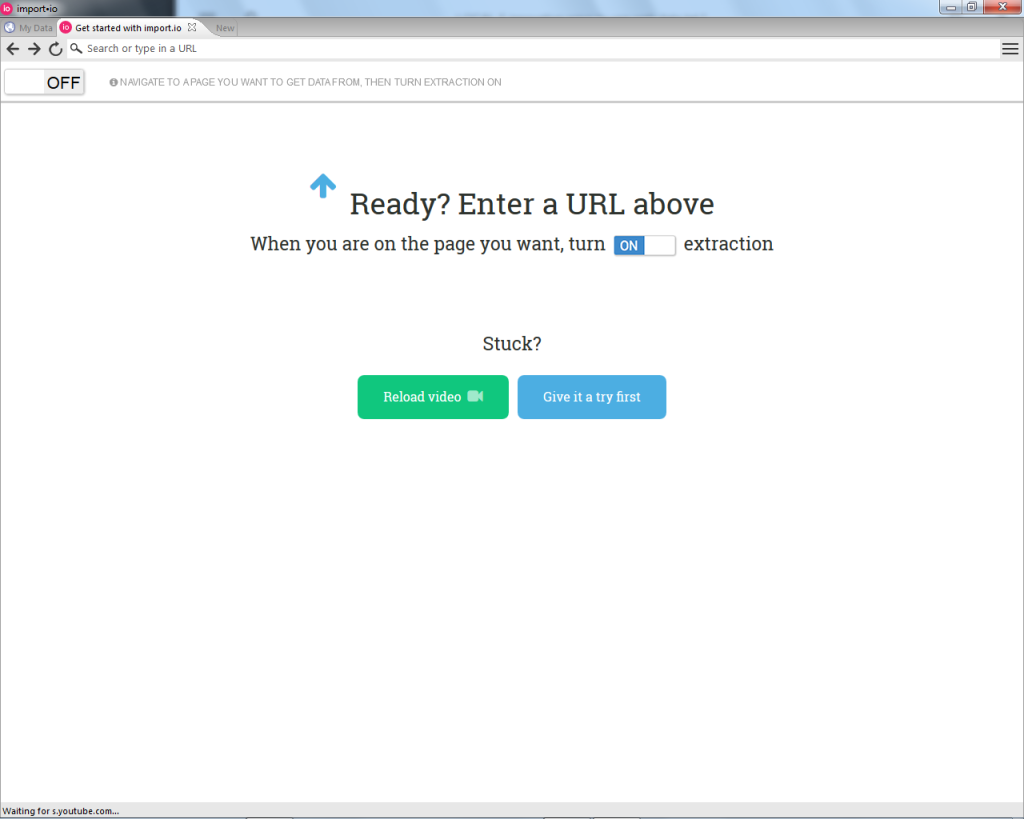

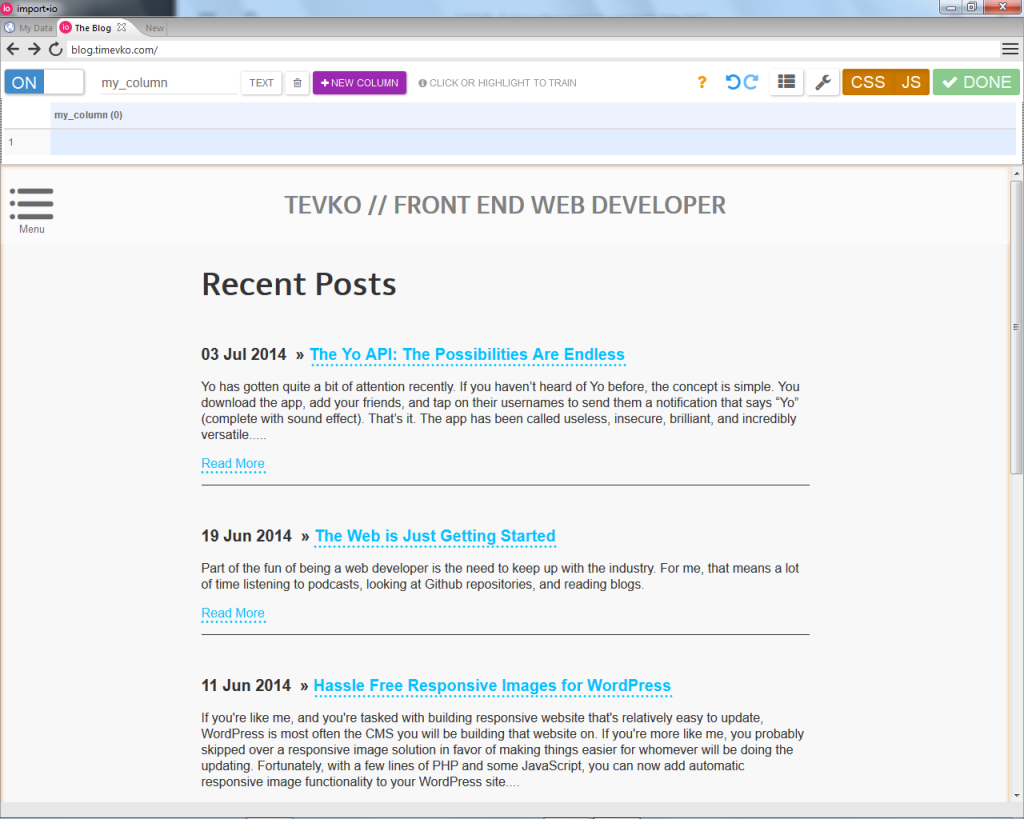

I then entered my blog address into the URL bar and turned extraction on to begin building my API.

As a side note, another great feature offered by import.io is the ability to toggle JavaScript support on or off, in case you need to collect data from an application that renders its content with JavaScript.

From there, creating the data table was as easy as navigating a simple point-and-click interface: I told the application how many rows I expected in the table (one row or multiple), then clicked on the data I wanted while naming the column it belonged in. The impressive part was that the application correctly predicted where the rest of the data was located, so I didn’t have to specify where the next post title, date, or description would be. import.io doesn’t just collect data; it also predicts where your data is going to be, and adjusts itself when the data moves to a different location on the page.

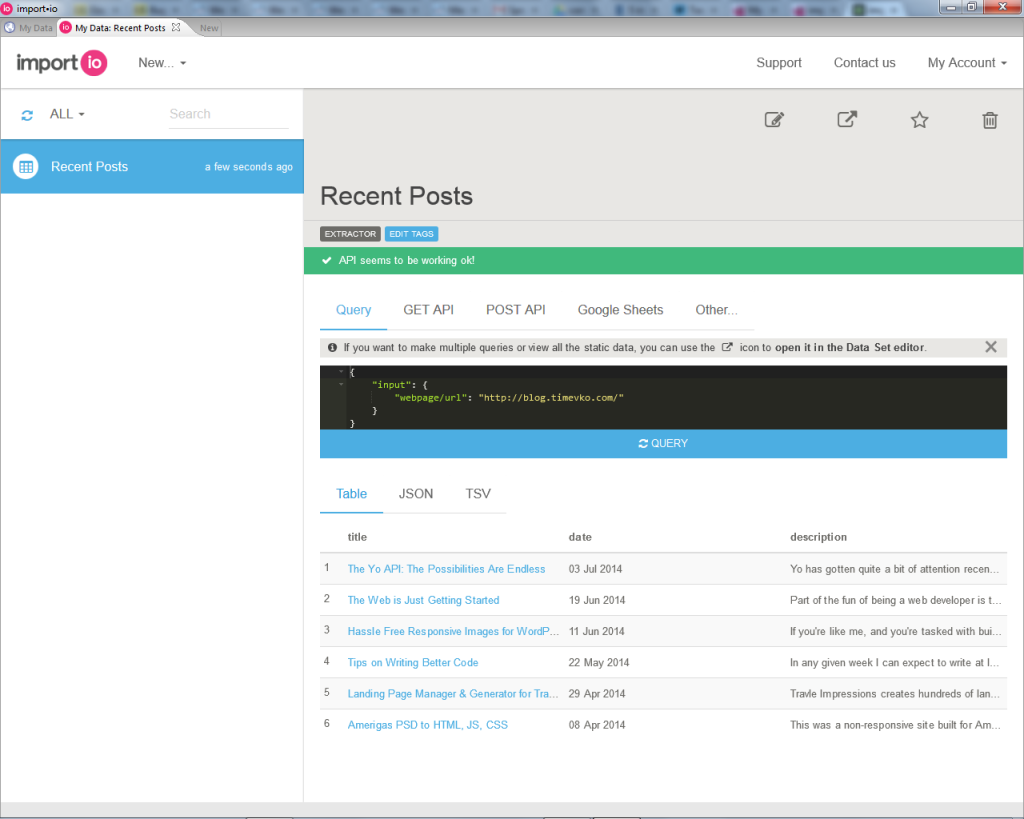

After all of the data was in place, I clicked “Done” and was brought back to the “My Data” section of the application, where I could edit, integrate, and export my data as needed. You can export the data in a number of ways, including JSON, TSV, or Google Sheets.

Here’s a sample of the automatically generated JSON code from my Recent Posts data collection:

```json
{
  "title": "http://blog.timevko.com/2014/07/03/yo-possibilities-endless",
  "title/_text": "The Yo API: The Possibilities Are Endless",
  "description": "Yo has gotten quite a bit of attention recently. If you haven’t heard of Yo before, the concept is simple. You download the app, add your friends, and tap on their usernames to send them a notification that says “Yo” (complete with sound effect). That’s it. The app has been called useless, insecure, brilliant, and incredibly versatile.....",
  "date": "03 Jul 2014",
  "title/_source": "/2014/07/03/yo-possibilities-endless"
}
```
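To give a sense of how an export like this might be consumed, here is a minimal JavaScript sketch that normalizes records shaped like the sample above. It assumes the export is an array of such objects and that dates always use the `DD Mon YYYY` format seen in the sample; the `normalizePost` and `toIsoDate` helpers and their output fields are hypothetical, not part of import.io.

```javascript
// Month abbreviations as they appear in dates like "03 Jul 2014" (assumed format).
const MONTHS = ["Jan", "Feb", "Mar", "Apr", "May", "Jun",
                "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"];

// Convert "03 Jul 2014" into the ISO date string "2014-07-03".
function toIsoDate(text) {
  const [day, mon, year] = text.trim().split(/\s+/);
  const month = MONTHS.indexOf(mon) + 1;
  if (month === 0) throw new Error(`Unrecognized month: ${mon}`);
  return `${year}-${String(month).padStart(2, "0")}-${day.padStart(2, "0")}`;
}

// Hypothetical helper: map one exported record to a simpler post object.
// In the sample, "title" holds the link and "title/_text" the visible title.
function normalizePost(record) {
  return {
    title: record["title/_text"],
    url: record["title"],
    path: record["title/_source"],
    date: toIsoDate(record["date"]),
    // Strip the trailing run of dots copied from the page excerpt.
    excerpt: record["description"].replace(/\.{3,}$/, "").trim(),
  };
}

// The description is shortened from the sample above.
const sample = [{
  "title": "http://blog.timevko.com/2014/07/03/yo-possibilities-endless",
  "title/_text": "The Yo API: The Possibilities Are Endless",
  "description": "Yo has gotten quite a bit of attention recently.....",
  "date": "03 Jul 2014",
  "title/_source": "/2014/07/03/yo-possibilities-endless"
}];

const posts = sample.map(normalizePost);
console.log(posts[0].title, posts[0].date); // The Yo API: The Possibilities Are Endless 2014-07-03
```

Converting the date to ISO form up front means later steps can sort posts with a plain string comparison.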

Creating this quick data collection with import.io took me only about five minutes, and the results were perfectly accurate. The best part? I didn’t pay a dime. import.io is free to use for life, and offers enterprise solutions as well.

Now that we know how easy it is to collect and use data from all over the web, what are some other interesting things we can do with it?

1. Build an app that finds diners open near you

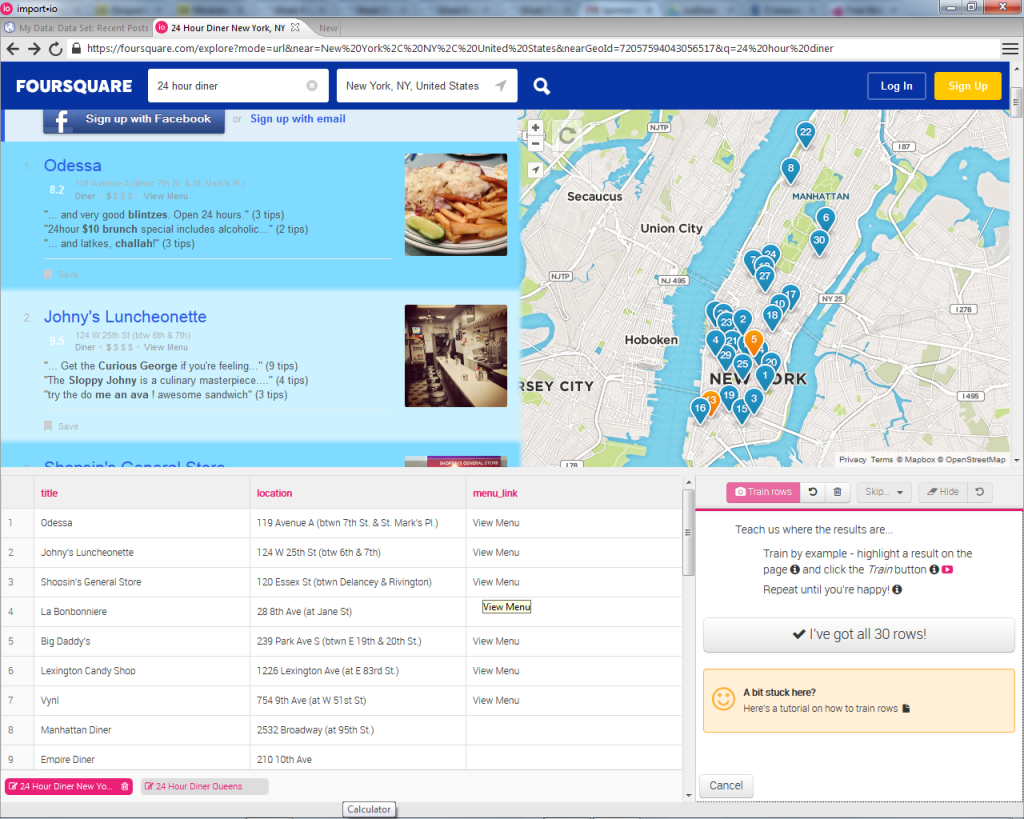

Let’s say it’s late at night, you’re in New York City, you’re hungry, and you really like diners. What do you do if you want to find a few nearby that are still open? Using import.io, you could build a web app that does this for you! How would that work?

Well, we’ve already seen how easy it is to get our data in an easy-to-use JSON format, and Foursquare is really good at finding 24-hour diners in NYC. So we could use import.io to gather information about the diners from Foursquare, and then create a simple interface that shows the diners closest to our current location on a map, with the help of the Google Maps API and the browser’s Geolocation API.
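The core of such an app is ranking diners by distance from the user. Here is a minimal JavaScript sketch, assuming the extracted data has been reshaped into objects with hypothetical `name`, `lat`, `lng`, and `openNow` fields (the real Foursquare pages may expose different columns). It uses the haversine formula for distance and shows, in comments, where the browser’s `navigator.geolocation.getCurrentPosition` call would supply the user’s position; drawing the map with the Google Maps API is left out.

```javascript
// Great-circle distance in kilometres between two {lat, lng} points (haversine formula).
function haversineKm(a, b) {
  const R = 6371; // mean Earth radius in km
  const toRad = (deg) => (deg * Math.PI) / 180;
  const dLat = toRad(b.lat - a.lat);
  const dLng = toRad(b.lng - a.lng);
  const h =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(a.lat)) * Math.cos(toRad(b.lat)) * Math.sin(dLng / 2) ** 2;
  return 2 * R * Math.asin(Math.sqrt(h));
}

// Return the `limit` nearest diners to `here`, keeping only ones flagged as open.
// The {name, lat, lng, openNow} shape is hypothetical; adapt it to your extracted columns.
function nearestOpenDiners(diners, here, limit = 3) {
  return diners
    .filter((d) => d.openNow)
    .map((d) => ({ ...d, km: haversineKm(here, d) }))
    .sort((x, y) => x.km - y.km)
    .slice(0, limit);
}

// Example data with made-up names and approximate Manhattan coordinates.
const diners = [
  { name: "Diner A", lat: 40.7420, lng: -73.9890, openNow: true },
  { name: "Diner B", lat: 40.7580, lng: -73.9855, openNow: true },
  { name: "Diner C", lat: 40.7306, lng: -73.9866, openNow: false },
];

// In the browser, `here` would come from the Geolocation API:
//   navigator.geolocation.getCurrentPosition((pos) => {
//     const here = { lat: pos.coords.latitude, lng: pos.coords.longitude };
//     console.log(nearestOpenDiners(diners, here));
//   });
const here = { lat: 40.7411, lng: -73.9897 };
console.log(nearestOpenDiners(diners, here).map((d) => d.name).join(", ")); // Diner A, Diner B
```

Filtering before computing distances keeps the work proportional to the number of open diners, which matters little here but helps if the scraped list grows large.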

If it sounds too good to be true, fear not! A similar project has already been created with import.io, and it worked so well that they dedicated a whole blog post to it!

2. Get statistics on your favorite website

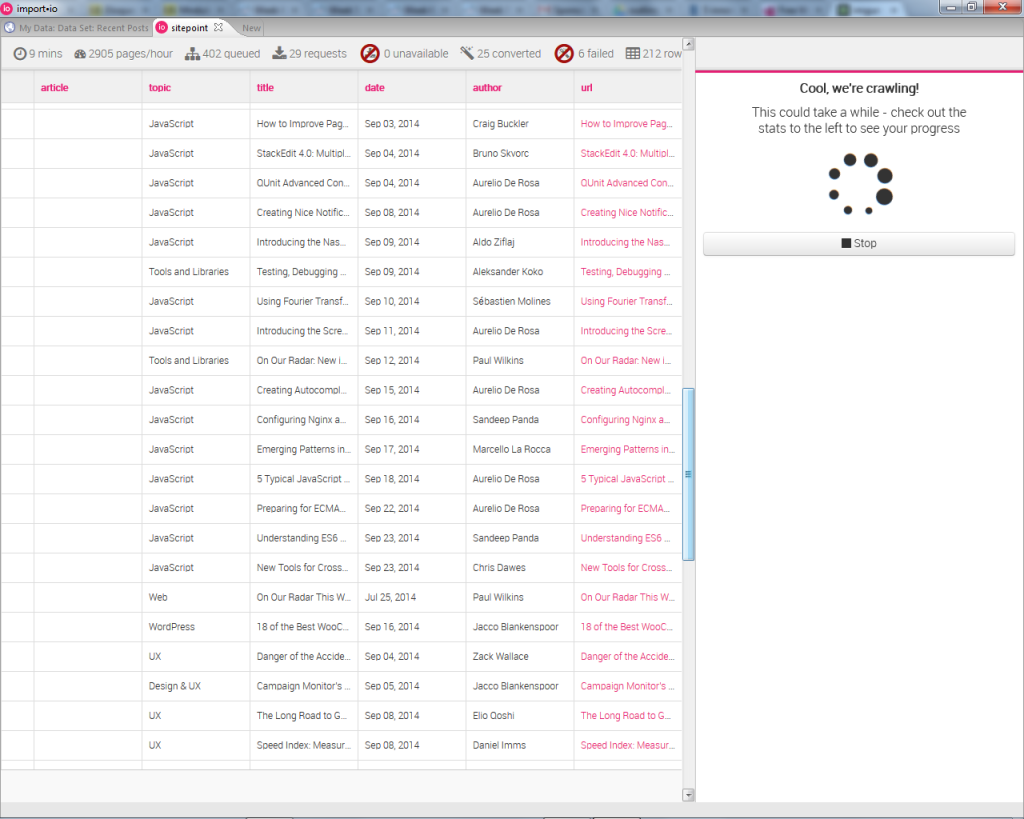

SitePoint posts a lot of articles every day. How would we get statistics on those articles, such as the most common topic, the average comment count, and the most frequent writers?

Our first step would be to create a web crawler, the process for which is detailed here. We could then export the data provided by our crawler as JSON, and use JavaScript to write a set of functions that push the URLs, comment counts, author names, and so on into arrays, and then return either the average comment count or the topics and author names that appear most frequently.
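The averaging and frequency counting described above fit in a few lines. Below is a minimal JavaScript sketch that works on an array of objects parsed from a crawler’s JSON export; the field names (`author`, `topic`, `comments`) and the sample rows are hypothetical and depend on the columns you define. It counts values with a `Map` rather than pushing them into arrays first, which avoids a second pass to tally them.

```javascript
// Average of a numeric field across all records (0 for an empty list).
function average(records, field) {
  if (records.length === 0) return 0;
  const total = records.reduce((sum, r) => sum + Number(r[field]), 0);
  return total / records.length;
}

// Most frequent value of a field, e.g. the most prolific author.
// Ties go to whichever value reached the top count first.
function mostFrequent(records, field) {
  const counts = new Map();
  let best = null;
  let bestCount = 0;
  for (const r of records) {
    const value = r[field];
    const n = (counts.get(value) || 0) + 1;
    counts.set(value, n);
    if (n > bestCount) {
      best = value;
      bestCount = n;
    }
  }
  return { value: best, count: bestCount };
}

// Hypothetical crawler rows; real column names depend on how the crawler was trained.
const articles = [
  { url: "/a", author: "Alice", topic: "JavaScript", comments: 12 },
  { url: "/b", author: "Bob", topic: "CSS", comments: 3 },
  { url: "/c", author: "Alice", topic: "JavaScript", comments: 6 },
];

console.log(average(articles, "comments"));          // 7
console.log(mostFrequent(articles, "author").value); // Alice
console.log(mostFrequent(articles, "topic").value);  // JavaScript
```

`Number(...)` is applied to each comment count because scraped values often arrive as strings.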

I set up a sample crawler to demonstrate this, and the process was quick and seamless.

Here’s a sample CodePen that uses some of the JSON data from my SitePoint crawler to list a few of the most recent (at the time) articles on the site.

See the Pen HGant by Tim (@tevko) on CodePen.
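A similar listing can be sketched in plain JavaScript. This is not the code from the pen; it is a minimal sketch that assumes rows with hypothetical `title`, `url`, and ISO-formatted `date` fields, and returns an HTML string you could assign to an element’s `innerHTML`.

```javascript
// Escape text before inserting it into HTML so scraped titles can't inject markup.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build a <ul> of the `count` newest articles. Assumes each row has hypothetical
// `title`, `url`, and ISO `date` ("YYYY-MM-DD") fields, so string comparison
// sorts them chronologically.
function recentArticlesHtml(rows, count = 3) {
  const items = [...rows] // copy so the caller's array isn't reordered
    .sort((a, b) => (a.date < b.date ? 1 : a.date > b.date ? -1 : 0))
    .slice(0, count)
    .map((r) => `<li><a href="${escapeHtml(r.url)}">${escapeHtml(r.title)}</a></li>`);
  return `<ul>${items.join("")}</ul>`;
}

const rows = [
  { title: "Older post", url: "/old", date: "2014-07-01" },
  { title: "Newest post", url: "/new", date: "2014-07-10" },
  { title: "Middle <post>", url: "/mid", date: "2014-07-05" },
];

// In a page you might write (the "#recent" element is hypothetical):
//   document.querySelector("#recent").innerHTML = recentArticlesHtml(rows);
console.log(recentArticlesHtml(rows, 2));
```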

Take a look at Product Hunt Statistics, which uses an import.io web crawler to get user data from Product Hunt.

3. Freelancers, get leads

Are you a freelancer? Are you looking for more business? Of course you are. import.io can crawl websites to find people and companies that are looking for the services you offer, and store their contact information in a spreadsheet. Using that information is as simple as sending an email to each of the people or companies in the spreadsheet. import.io has a blog post that explains this process. Their best advice: make sure the messages you send are personal. No one likes an automated email!
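Since the data can be exported as TSV, one way to keep outreach personal without starting from a blank page is to generate drafts you then edit by hand. This minimal JavaScript sketch assumes a TSV export with hypothetical `name`, `company`, `email`, and `need` columns and no tabs or line breaks inside fields; it only builds draft messages and does not send anything.

```javascript
// Parse a tab-separated export into objects keyed by the header row.
// Simple sketch: assumes no field contains a tab or newline.
function parseTsv(text) {
  const [header, ...lines] = text.trim().split(/\r?\n/);
  const keys = header.split("\t");
  return lines.map((line) => {
    const cells = line.split("\t");
    return Object.fromEntries(keys.map((k, i) => [k, cells[i] ?? ""]));
  });
}

// Draft (not send) one message per lead, filling in details specific to each lead.
// Column names "name", "company", "email", and "need" are hypothetical.
function draftEmails(leads, senderName) {
  return leads.map((lead) => ({
    to: lead.email,
    subject: `Help with ${lead.need} at ${lead.company}`,
    body:
      `Hi ${lead.name},\n\n` +
      `I saw that ${lead.company} is looking for help with ${lead.need}. ` +
      `I've done similar work and would be glad to talk it over.\n\n` +
      `Best,\n${senderName}`,
  }));
}

const tsv = [
  "name\tcompany\temail\tneed",
  "Sam\tAcme Corp\tsam@example.com\ta site redesign",
].join("\n");

const drafts = draftEmails(parseTsv(tsv), "Your Name");
console.log(drafts[0].subject); // Help with a site redesign at Acme Corp
```

Keeping the output as drafts leaves room to add a genuinely personal line to each message before it goes out, in the spirit of import.io’s advice.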

4. Make cool charts and graphs

Why make cool charts and graphs, you ask? They’re great for impressing visitors to your portfolio, learning new libraries like d3.js, or showing off to your fellow developer friends!

The Data Press recently used import.io to do this very thing, and the results are pretty impressive! They used Metacritic and search result information to collect data on music festivals and the bands performing at them. Once all of the data was collected, they imported it into Tableau to create a visualization.
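Before scraped rows can feed a chart, whether in d3.js or Tableau, they usually need to be aggregated. This is not The Data Press’s workflow; it is a minimal JavaScript sketch, with made-up rows and hypothetical `festival`, `band`, and `score` fields, that averages a score per group and produces the `{label, value}` pairs a bar chart typically binds to.

```javascript
// Group rows by a key and average a numeric field per group,
// producing [{label, value}] pairs sorted from highest to lowest value.
function averageBy(rows, groupField, valueField) {
  const groups = new Map();
  for (const row of rows) {
    const key = row[groupField];
    const g = groups.get(key) || { sum: 0, n: 0 };
    g.sum += Number(row[valueField]);
    g.n += 1;
    groups.set(key, g);
  }
  return [...groups]
    .map(([label, { sum, n }]) => ({ label, value: sum / n }))
    .sort((a, b) => b.value - a.value);
}

// Made-up rows: one per band appearance, with a hypothetical review score.
const appearances = [
  { festival: "Festival X", band: "Band 1", score: 80 },
  { festival: "Festival X", band: "Band 2", score: 70 },
  { festival: "Festival Y", band: "Band 3", score: 85 },
];

const series = averageBy(appearances, "festival", "score");

// Quick text "bar chart" to eyeball the result before building a real one.
for (const { label, value } of series) {
  console.log(`${label.padEnd(12)} ${"#".repeat(Math.round(value / 10))} ${value}`);
}
```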

5. Make viral content and change the world

import.io created a dataset of everyone on Forbes’ list of The World’s Billionaires, then gave that data to Oxfam, which used it to find deeper relationships between the well-off and the less fortunate. The Guardian picked up on this and used the findings to write an interesting article about poverty in Britain. You can read more about the dataset and Oxfam on the import.io blog.

Conclusion

Data is everywhere, but difficulties in collection and organization often prevent us from using it to our advantage. With import.io, the headaches of finding and using large datasets are gone. Making APIs, crawling the web, and using automation to collect data from an authenticated source are now just a point and a click away.

Over to you: What would you build with easily aggregated web data?

Tim Evko is a front-end web developer from New York, with a passion for responsive web development, Sass, and JavaScript. He lives on coffee, CodePen demos and flannel shirts.