Varnish Cache is an HTTP accelerator and reverse proxy developed by Danish consultant and FreeBSD core developer Poul-Henning Kamp, along with other developers at Norwegian Linpro AS. It was released in 2006.

According to Pingdom.com, a company focused on web performance, in 2012 Varnish was already famous among the world’s top websites for its capacity to speed up web delivery, and it was being used by sites such as Wired, SlideShare, Zappos, SoundCloud, Weather.com, Business Insider, Answers.com, Urban Dictionary, MacRumors, DynDNS, OpenDNS, Lonely Planet, Technorati, ThinkGeek and Economist.com.

It is licensed under a two-clause BSD license. Varnish has a premium tier, Varnish Plus, focused on enterprise customers, which offers some extra features, modules, and support.

Although there are other solutions that also shine, Varnish is still a go-to solution that can dramatically improve website speed, reduce the strain on the web application server’s CPU, and even serve as a protection layer from DDoS attacks. KeyCDN recommends deploying it on the origin server stack.

For more demanding websites, Varnish can sit on a dedicated machine and make sure that the origin servers aren’t affected by the flood of requests.

At the time of this writing (November 2017), Varnish is at version 5.2.

How it Works

Caching in general works by keeping the pre-computed outputs of an application in memory, or on disk, so that expensive computations don’t have to be repeated on every request. A web cache can be on the client (browser cache) or on the server. Varnish falls into the second category. It is usually configured to listen for requests on the standard HTTP port (80), and then serves the requested resource to the website visitor.

The first time a certain URL and path are requested, Varnish has to request it from the origin server in order to serve it to the visitor. This is called a CACHE MISS, which can be read in HTTP response headers, depending on the Varnish setup.

According to the docs,

when an object, any kind of content i.e. an image or a page, is not stored in the cache, then we have what is commonly known as a cache miss, in which case Varnish will go and fetch the content from the web server, store it and deliver a copy to the user and retain it in cache to serve in response to future requests.

When a particular URL or a resource is cached by Varnish and stored in memory, it can be served directly from server RAM; it doesn’t need to be computed every time. Varnish will start delivering a CACHE HIT in a matter of microseconds.

This means that neither our origin server nor our web application, including its database, is touched by future requests. They won’t even be aware of the requests made to cached URLs.
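The miss-then-hit flow described above can be sketched in a few lines of JavaScript. This is only an illustration of the idea, not how Varnish is implemented: createCache and the simulated origin function are hypothetical names introduced for the example.

```javascript
// Minimal sketch of a caching layer in front of an origin.
// All names are hypothetical; Varnish itself is written in C.
function createCache(fetchFromOrigin) {
  const store = new Map(); // URL -> cached body

  return function handle(url) {
    if (store.has(url)) {
      // Cache HIT: served from memory, the origin is not contacted.
      return { body: store.get(url), cache: 'HIT' };
    }
    // Cache MISS: fetch from the origin, store the result, then deliver it.
    const body = fetchFromOrigin(url);
    store.set(url, body);
    return { body, cache: 'MISS' };
  };
}

// Simulated origin that counts how often it is called.
let originCalls = 0;
const handle = createCache((url) => {
  originCalls += 1;
  return `rendered page for ${url}`;
});

const first = handle('/about');
const second = handle('/about');
console.log(first.cache, second.cache, originCalls);
```

The second request for the same URL never reaches the simulated origin, which is the effect we want for our real back end.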

The origin server (or servers, if we use Varnish as a load balancer) is configured to listen on a non-standard port, like 8888, and Varnish is made aware of its address and port.

Varnish Features

Varnish is threaded. It’s been reported that Varnish was able to handle over 200,000 requests per second on a single instance. If properly configured, the only bottlenecks of your web app will be network throughput and the amount of RAM. (This shouldn’t be an unreasonable requirement, because it just needs to keep computed web pages in memory, so for most websites, a couple of gigabytes should be sufficient.)

Varnish is extendable via VMODs. These are modules that can use standard C libraries and extend Varnish functionality. Community-contributed VMODs range from header manipulation to Lua scripting, throttling of requests, authentication, and so on.

Varnish has its own domain-specific language, VCL. VCL provides comprehensive configurability. With a full-page caching server like Varnish, there are a lot of intricacies that need to be solved.

When we cache a dynamic website with dozens or hundreds of pages and paths, with GET query parameters, we’ll want to exclude some of them from cache, or set different cache-expiration rules. Sometimes we’ll want to cache certain Ajax requests, or exclude them from the cache. This varies from project to project, and can’t be tailored in advance.

Sometimes we’ll want Varnish to decide what to do with the request depending on request headers. Sometimes we’ll want to pass requests directly to the back end with a certain cookie set.

To quote the Varnish book,

VCL provides subroutines that allow you to affect the handling of any single request almost anywhere in the execution chain.
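To make the kind of per-request decision described above more concrete, here is a rough sketch written in JavaScript rather than VCL. The rules themselves (an /admin path and a session_id cookie) are assumptions made up for illustration; the returned strings mirror the VCL return actions pass and hash.

```javascript
// Sketch of request routing rules, in JavaScript rather than VCL.
// The /admin path and the session_id cookie name are hypothetical.
function decide(req) {
  // Only GET and HEAD requests are candidates for caching.
  if (req.method !== 'GET' && req.method !== 'HEAD') {
    return 'pass';
  }
  // Example rule: never cache an (assumed) admin area.
  if (req.url.startsWith('/admin')) {
    return 'pass';
  }
  // Example rule: a session cookie suggests personalised content.
  const cookie = req.headers.cookie || '';
  if (/(^|;\s*)session_id=/.test(cookie)) {
    return 'pass';
  }
  // Everything else may be looked up in (and stored in) the cache.
  return 'hash';
}

console.log(decide({ method: 'GET', url: '/blog', headers: {} }));
console.log(decide({ method: 'POST', url: '/blog', headers: {} }));
```

In a real setup these rules would live in the vcl_recv subroutine; the point here is only that the order of the checks determines which rule wins.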

Purging the cache often needs to be done dynamically — triggered by publishing articles or updating the website. Purging also needs to be done as atomically as possible — meaning it should target the smallest possible scope, like a single resource or path.
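The difference in scope can be illustrated with a small JavaScript sketch. It models the cache as a plain Map and is not Varnish code; purgeUrl, purgePrefix and the sample URLs are hypothetical.

```javascript
// Sketch: purging a single resource versus a whole path.
// The cache contents and function names are made up for illustration.
const cache = new Map([
  ['/blog/varnish', 'cached article'],
  ['/blog/nginx', 'cached article'],
  ['/about', 'cached page'],
]);

// Smallest scope: remove exactly one URL.
function purgeUrl(url) {
  return cache.delete(url); // true if the entry existed
}

// Wider scope: remove every entry under a path prefix.
function purgePrefix(prefix) {
  let removed = 0;
  for (const url of [...cache.keys()]) {
    if (url.startsWith(prefix)) {
      cache.delete(url);
      removed += 1;
    }
  }
  return removed;
}

const purgedOne = purgeUrl('/blog/varnish');
const sizeAfterOne = cache.size;
const purgedRest = purgePrefix('/blog/');
console.log(purgedOne, sizeAfterOne, purgedRest, cache.has('/about'));
```

Publishing a single article would ideally trigger only the first kind of purge, leaving the rest of the cache warm.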

This means that specific rules need to be defined, with their order of priority in mind. Some examples can be found in the Varnish book (which is available to read online or as a downloadable PDF).

Varnish has a set of tools for monitoring and administering the server:

varnishtop lets us monitor requested URLs and their frequency.

varnishncsa can be used to print the Varnish Shared memory Log (VSL): it dumps everything pointing to a certain domain and subdomains.

varnishhist reads the VSL and presents a live histogram showing the distribution of the last N requests, giving an overview of server and back-end performance.

varnishtest is used to test VCL configuration files and develop VMODs.

varnishstat displays statistics about our varnishd instance.

varnishlog is used to get data about specific clients and requests.

Varnish Software offers a set of commercial, paid solutions either built on top of Varnish Cache, or extending its usage and helping with monitoring and management: Varnish API Engine, Varnish Extend, Akamai Connector for Varnish, Varnish Administration Console (VAC), and Varnish Custom Statistics (VCS).

Installing Varnish

The Varnish docs cover installation on various systems. We’ll go with Ubuntu 16.04 LTS in this post.

Packagecloud.io has instructions for updating the Ubuntu repositories and installing Varnish version 5:

curl -L https://packagecloud.io/varnishcache/varnish5/gpgkey | sudo apt-key add -

sudo apt-get update

sudo apt-get install -y apt-transport-https

Then we add the following lines to the newly created file /etc/apt/sources.list.d/varnishcache_varnish5.list:

deb https://packagecloud.io/varnishcache/varnish5/ubuntu/ xenial main

deb-src https://packagecloud.io/varnishcache/varnish5/ubuntu/ xenial main

Then we run:

sudo apt-get update

sudo apt-get install varnish

We can test a brand-new WordPress installation running on Nginx. First, we change Nginx’s default listening port from 80 to 8080 — which is the port Varnish expects the back end to be running on — by adding the following lines to the Nginx virtual host, inside the server clause:

server {

listen 127.0.0.1:8080 default_server;

listen [::]:8080 default_server;

Then we configure Varnish: we edit /etc/default/varnish, replacing port 6081 with 80 (the default web port):

DAEMON_OPTS="-a :80 \

-T localhost:6082 \

-f /etc/varnish/default.vcl \

-S /etc/varnish/secret \

-s malloc,256m"

We also need to change /lib/systemd/system/varnish.service, making the same replacement:

[Service]

Type=simple

LimitNOFILE=131072

LimitMEMLOCK=82000

ExecStart=/usr/sbin/varnishd -j unix,user=vcache -F -a :80 -T localhost:6082 -f /etc/varnish/default.vcl -S /etc/varnish/secret -s malloc,256m

ExecReload=/usr/share/varnish/reload-vcl

ProtectSystem=full

ProtectHome=true

PrivateTmp=true

PrivateDevices=true

Then we restart Nginx and Varnish:

sudo service nginx restart

sudo /etc/init.d/varnish restart

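Warning: due to some peculiarities, Varnish usually must be restarted (or started) this way, not with service varnish start, in order to read all the config files we edited. Since we also edited a systemd unit file, systemd may need sudo systemctl daemon-reload before the restart for that change to take effect.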

We tested the website speed and responsiveness with Locust and Pingdom Tools.

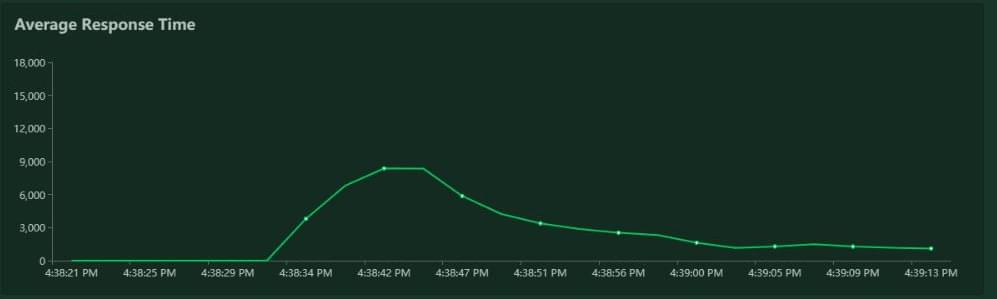

Once the cache was warmed up, the difference was impressive, despite Nginx being well known for its speed: the average number of requests per second increased three- to fourfold, and response times were greatly reduced. Load times were a bit higher due to network latency, since we tested the website hosted in California from a workstation in Europe.

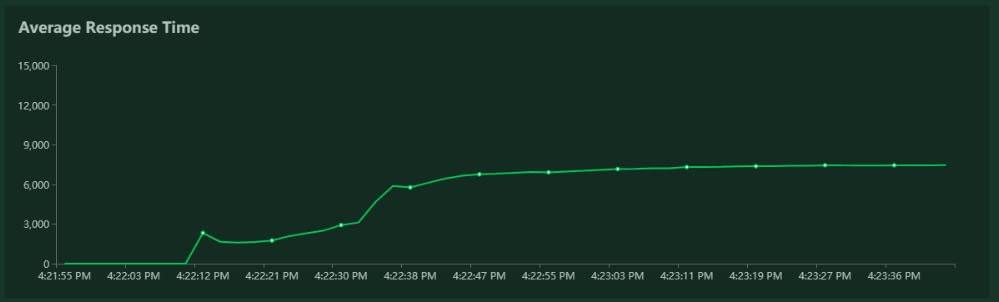

Locust results for Nginx:

Locust results for Nginx + Varnish:

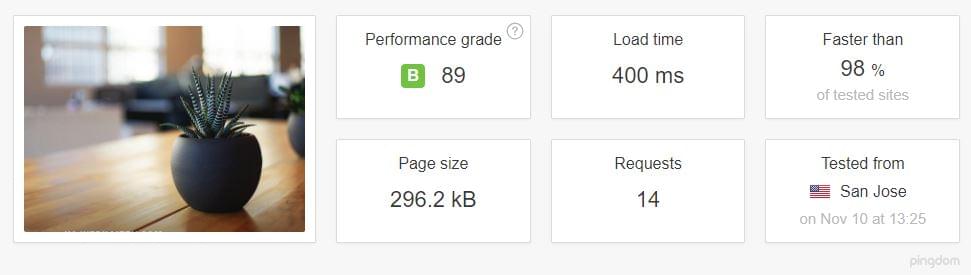

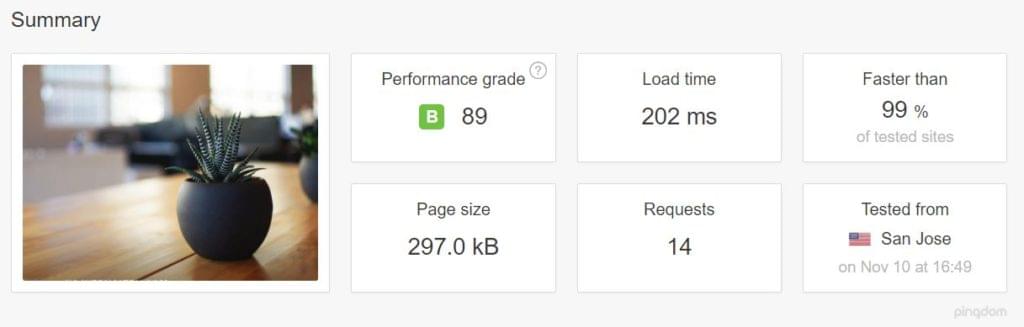

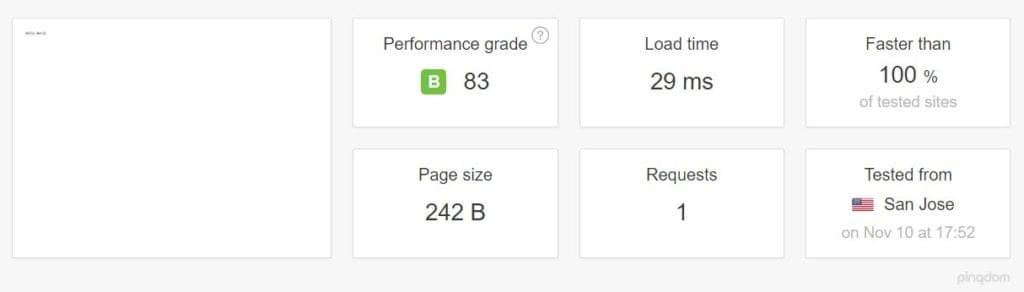

Pingdom results were also good.

Pingdom results for Nginx stack, tested from California:

Pingdom results for Nginx + Varnish, California:

Notice also the TTFB for each case.

Nginx alone:

Nginx + Varnish:

Even if we neglect the pink part, which is the DNS lookup, there is still an obvious difference.

Simplicity of Setup

Varnish doesn’t care what’s listening on port 8080 (we can change this default port as well, if required). This means that setting up Apache, or some other application server, should be just as straightforward: all we need to do is to configure them to listen on port 8080 instead of 80.

Setting up Varnish with NodeJS

On our existing server, where we had already installed Varnish, setting up a hello-world Node app was just as simple. We installed the nodejs and npm packages and linked NodeJS to Node:

ln -s /usr/bin/nodejs /usr/bin/node

Then we created a simple node hello-world program listening on port 8080:

#!/usr/bin/env nodejs

var http = require('http');

http.createServer(function (req, res) {

res.writeHead(200, {'Content-Type': 'text/plain'});

res.end('Hello World\n');

}).listen(8080, 'localhost');

console.log('Server running at http://localhost:8080/');

Then we installed PM2, a process manager for Node, to be able to daemonize our app:

sudo npm install -g pm2

pm2 start index.js

And voilà, our Node app was being served by Varnish:

Other Tips

To be able to check in our browser inspector whether or not a response was served from the cache, we can add the following snippet to our Varnish config file, in the sub vcl_deliver block:

sub vcl_deliver {

if (obj.hits > 0) {

set resp.http.X-Cache = "HIT";

} else {

set resp.http.X-Cache = "MISS";

}

}

Then we can see the feedback in our response headers as HIT or MISS:
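The same check can be scripted instead of read off the browser inspector. The following Node sketch assumes the X-Cache header set by the vcl_deliver snippet above; cacheStatus and checkUrl are hypothetical helper names, and the URL in the usage note is only an example.

```javascript
const http = require('http');

// Interpret the X-Cache header set in vcl_deliver.
// Node lower-cases incoming header names, hence 'x-cache'.
function cacheStatus(headers) {
  const value = headers['x-cache'];
  return value === 'HIT' || value === 'MISS' ? value : 'UNKNOWN';
}

// Request a URL and report its cache status through a callback.
function checkUrl(url, callback) {
  http
    .get(url, (res) => {
      res.resume(); // discard the body; only the headers matter here
      callback(null, cacheStatus(res.headers));
    })
    .on('error', callback);
}
```

Assuming Varnish listens on port 80 of the local machine, calling checkUrl('http://localhost/', (err, status) => console.log(err || status)) twice in a row should typically report MISS and then HIT for a cacheable page.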

One more warning: Varnish (or at least the open-source version) doesn’t support SSL, a position reiterated by its creator Poul-Henning Kamp (who is not shy about voicing his opinions). So when you need to use Varnish and HTTPS, consider putting another proxy in front of it for SSL termination, such as HAProxy, or Varnish’s own Hitch.

Or, if that’s getting too involved, just use Nginx and FastCGI Cache.

Conclusion

In this article we tried to give a brief introduction to Varnish Cache without going too deeply into its setup, monitoring and administration.

Tuning server performance is a science of its own, and presenting the full scope of use cases and setups requires another article. I’ll be diving a bit deeper into this subject in another article, so stay tuned for a future installment, where I’ll add Varnish in front of a real app.

Frequently Asked Questions (FAQs) about Boosting Server Performance with Varnish

What is Varnish and how does it improve server performance?

Varnish is a powerful open-source HTTP accelerator designed to enhance the performance of web servers. It works by storing a copy of a page served by the web server the first time a user visits that page. When the same user or another user requests the same page, Varnish will serve the cached copy instead of requesting the page from the server again. This significantly reduces the server load and increases the speed at which the page is served to the user, thereby improving the overall performance of the server.

How does Varnish compare to other caching solutions?

Varnish stands out from other caching solutions due to its flexibility and speed. It supports Edge Side Includes (ESI), a markup language that lets a page be assembled from separately cached fragments. This means that even dynamic pages with constantly changing content can be partly cached and served quickly. Additionally, Varnish is designed to handle thousands of requests per second, making it an excellent choice for high-traffic websites.

How can I install and configure Varnish on my server?

Varnish can be installed on your server using the package manager for your operating system. Once installed, you can configure Varnish by editing the default.vcl file, which is the main configuration file for Varnish. This file allows you to specify how Varnish should handle requests and responses, and how it should cache content.

Can Varnish be used with Node.js and Nginx?

Yes, Varnish can be used in conjunction with Node.js and Nginx. In this setup, Node.js would handle the application logic, Nginx would serve static files, and Varnish would cache and serve dynamic content. This allows for a highly scalable and performant web application.

What are some common issues that can arise when using Varnish and how can they be resolved?

Some common issues that can arise when using Varnish include cache misses and high backend load. These issues can often be resolved by fine-tuning your Varnish configuration. For example, you can adjust the TTL (Time To Live) for cached objects to reduce backend load, or use Varnish’s built-in tools to analyze cache performance and identify areas for improvement.
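How a TTL trades freshness against backend load can be shown with a small JavaScript sketch. This is not Varnish code: createTtlCache is a hypothetical helper, and the clock is injected so that expiry can be demonstrated without waiting.

```javascript
// Sketch of TTL-based expiry. The clock function is injectable so the
// example can simulate the passage of time.
function createTtlCache(ttlMs, now = Date.now) {
  const store = new Map(); // key -> { value, expiresAt }

  return {
    set(key, value) {
      store.set(key, { value, expiresAt: now() + ttlMs });
    },
    get(key) {
      const entry = store.get(key);
      if (!entry) return undefined;
      if (now() >= entry.expiresAt) {
        // Expired: drop the entry so the next request goes to the back end.
        store.delete(key);
        return undefined;
      }
      return entry.value;
    },
  };
}

// Simulated clock, in milliseconds.
let clock = 0;
const ttlCache = createTtlCache(1000, () => clock);
ttlCache.set('/home', 'cached page');

clock = 999;
const beforeExpiry = ttlCache.get('/home'); // still cached
clock = 1000;
const afterExpiry = ttlCache.get('/home'); // expired
console.log(beforeExpiry, afterExpiry);
```

A longer TTL means fewer trips to the back end but a longer window in which visitors may see stale content.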

How can I monitor the performance of Varnish?

Varnish provides several tools for monitoring its performance, including varnishstat, varnishlog, and varnishhist. These tools provide real-time statistics and logs, allowing you to monitor cache hit rates, request rates, and other important metrics.

Can Varnish handle SSL/TLS connections?

Varnish does not natively support SSL/TLS connections. However, it can be used in conjunction with other tools, such as Nginx or Hitch, to handle SSL/TLS connections. In this setup, Nginx or Hitch would handle the SSL/TLS connection, and then pass the unencrypted request to Varnish.

How does Varnish handle cookies?

By default, Varnish does not cache responses that include a Set-Cookie header, and it does not serve cached content to requests that include a Cookie header. However, this behavior can be customized in the VCL file. For example, you can configure Varnish to strip cookies from certain requests, or to cache content even if it includes a Set-Cookie header.
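Stripping cookies that the back end doesn’t need is a common way to make more requests cacheable. The idea is sketched below in JavaScript rather than VCL; stripCookies is a hypothetical helper, and the analytics cookie name prefixes are assumptions chosen for the example.

```javascript
// Sketch: remove selected cookies from a Cookie header value.
// shouldStrip receives a cookie name and returns true to drop it.
function stripCookies(cookieHeader, shouldStrip) {
  return cookieHeader
    .split(';')
    .map((part) => part.trim())
    .filter((part) => part !== '')
    .filter((part) => !shouldStrip(part.split('=')[0]))
    .join('; ');
}

// Assumed rule: treat these name prefixes as client-side analytics cookies.
const isAnalyticsCookie = (name) => /^(_ga|_gid|__utm)/.test(name);

const cleaned = stripCookies('_ga=GA1.2.3; session_id=abc; __utma=1', isAnalyticsCookie);
const empty = stripCookies('_ga=GA1.2.3', isAnalyticsCookie);
console.log(JSON.stringify(cleaned), JSON.stringify(empty));
```

If nothing is left after stripping, the request carries no meaningful cookies and can be treated like an anonymous one.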

Can Varnish be used in a load-balanced environment?

Yes, Varnish can be used in a load-balanced environment. In fact, Varnish can even act as a load balancer itself, distributing requests among multiple backend servers through directors that implement strategies such as round-robin, random or hash-based selection.
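One of the simplest distribution strategies is round-robin, where each new request goes to the next backend in turn. The following JavaScript sketch shows the idea only; it is not Varnish’s implementation, and createRoundRobin and the backend addresses are hypothetical.

```javascript
// Sketch of round-robin backend selection.
function createRoundRobin(backends) {
  let next = 0; // index of the backend to hand out next

  return function pick() {
    const backend = backends[next];
    next = (next + 1) % backends.length; // wrap around at the end
    return backend;
  };
}

// Hypothetical backend addresses.
const pick = createRoundRobin(['127.0.0.1:8080', '127.0.0.1:8081']);
const picks = [pick(), pick(), pick()];
console.log(picks.join(', '));
```

With two backends, the third request wraps around to the first one again.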

How can I purge or invalidate cached content in Varnish?

Varnish provides several methods for purging or invalidating cached content, including the PURGE HTTP method and the BAN command. These methods allow you to remove specific content from the cache, ensuring that users always see the most up-to-date content.

Tonino Jankov

Tonino is a web developer and IT consultant who's dived through open-source code for over a decade. He's also a crypto enthusiast, Linux fan, and moderate libertarian.